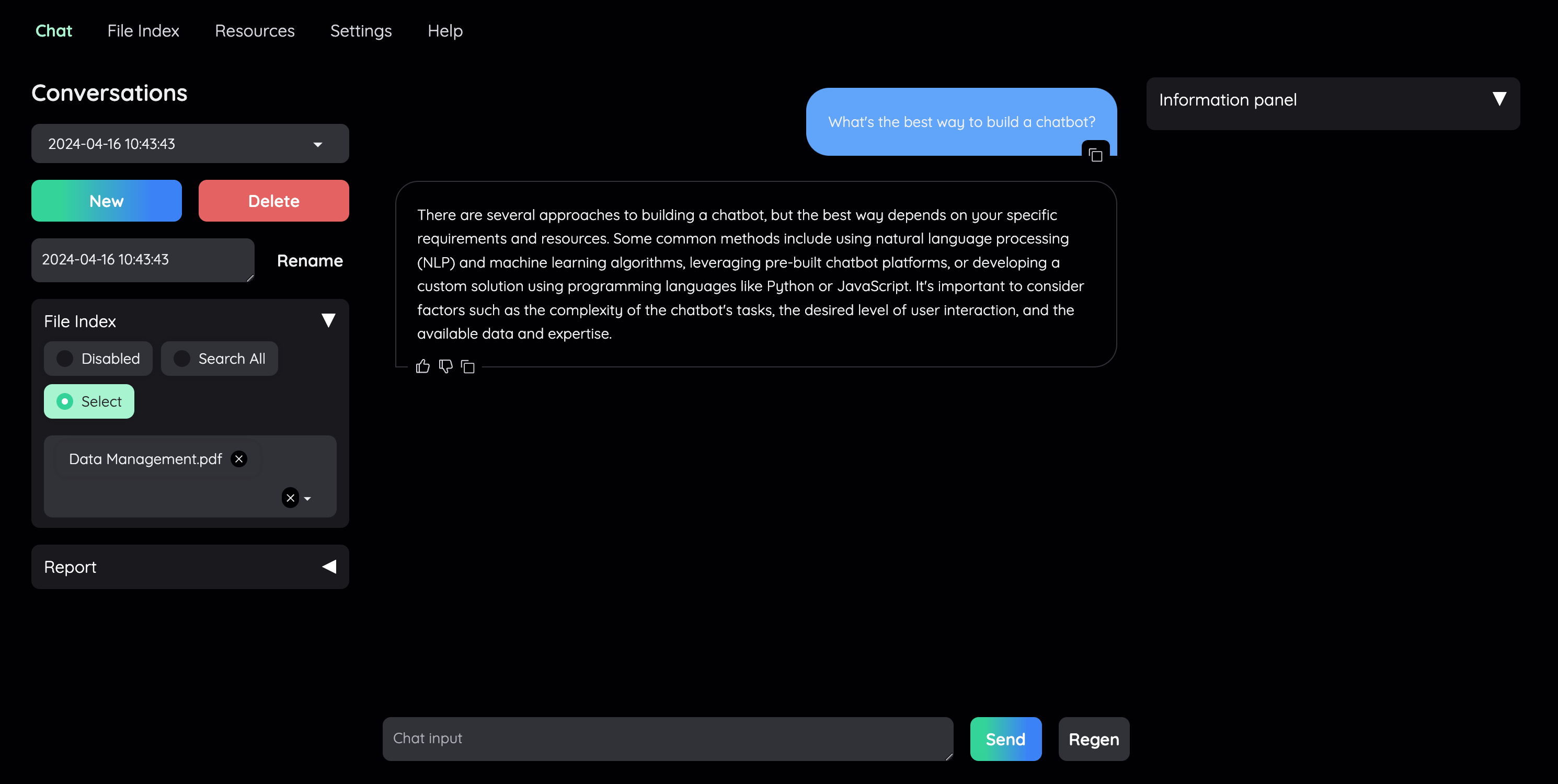

kotaemon

Build and use local RAG-based Question Answering (QA) applications.

This repository is aimed at both end users who want to run QA over their own documents and developers who want to build their own QA pipeline.

- For end users:
  - A local Question Answering UI for RAG-based QA.
  - Supports LLM API providers (OpenAI, AzureOpenAI, Cohere, etc.) and local LLMs (currently only the GGUF format is supported, via llama-cpp-python).
  - Easy installation scripts, no environment setup required.
- For developers:
  - A framework for building your own RAG-based QA pipeline.
  - See your RAG pipeline in action with the provided UI (built with Gradio).
  - Share your pipeline so that others can use it.

This repository is under active development. Feedback, issues, and PRs are highly appreciated. Your input is valuable as it helps us persuade our business guys to support open source.

Setting up

- Clone the repo

      git clone git@github.com:Cinnamon/kotaemon.git
      cd kotaemon

- Install the environment

  - Create a conda environment (python >= 3.10 is recommended)

        conda create -n kotaemon python=3.10
        conda activate kotaemon
        # install dependencies
        cd libs/kotaemon
        pip install -e ".[all]"

  - Or run the installer (one of the scripts/run_* scripts, depending on your OS). You will then have all the dependencies installed as a conda environment at install_dir/env.

        conda activate install_dir/env

- Pre-commit

      pre-commit install

- Test

      pytest tests
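After the setup steps, a quick sanity check of the active environment can save a confusing test failure later. The following is a minimal sketch, not part of the repository: `check_environment` is a hypothetical helper, and it assumes the editable install exposes a top-level package named `kotaemon` (inferred from the `libs/kotaemon` directory, not confirmed here). The minimum version comes from the "python >= 3.10 is recommended" note above.

```python
import importlib.util
import sys

# Taken from the setup notes: python >= 3.10 is recommended.
MIN_PYTHON = (3, 10)


def check_environment(package: str = "kotaemon") -> dict:
    """Report whether the interpreter and the package look ready.

    `package` defaults to "kotaemon", which is an assumption about the
    import name produced by `pip install -e ".[all]"`.
    """
    return {
        "python_version": ".".join(map(str, sys.version_info[:3])),
        "python_ok": sys.version_info[:2] >= MIN_PYTHON,
        # find_spec returns None when a top-level package cannot be located.
        "package_found": importlib.util.find_spec(package) is not None,
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Run it inside the activated conda environment. If `package_found` is reported as `False`, the editable install probably did not complete, or a different environment is active.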
