diff --git a/README.md b/README.md

index 1a48035..9a8b281 100644

--- a/README.md

+++ b/README.md

@@ -3,7 +3,7 @@

An open-source clean & customizable RAG UI for chatting with your documents. Built with both end users and

developers in mind.

-

+

[Live Demo](https://huggingface.co/spaces/taprosoft/kotaemon) |

[Source Code](https://github.com/Cinnamon/kotaemon)

@@ -68,7 +68,7 @@ appreciated.

- **Extensible**. Being built on Gradio, you are free to customize / add any UI elements as you like. Also, we aim to support multiple strategies for document indexing & retrieval. `GraphRAG` indexing pipeline is provided as an example.

-

+

## Installation

@@ -114,7 +114,7 @@ pip install -e "libs/ktem"

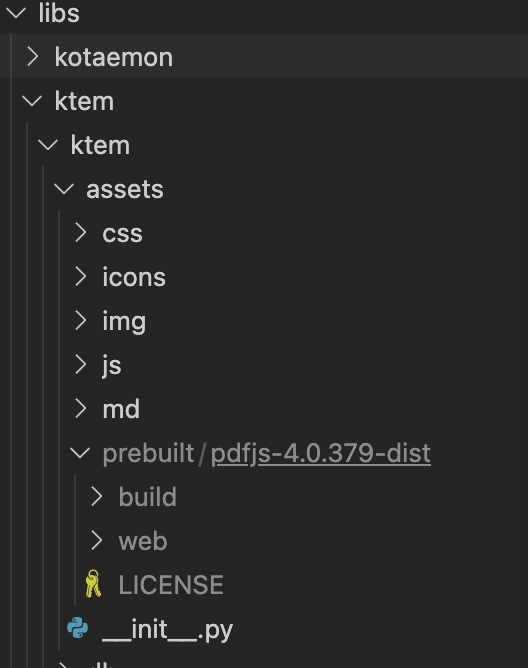

- (Optional) To enable in-browser PDF_JS viewer, download [PDF_JS_DIST](https://github.com/mozilla/pdf.js/releases/download/v4.0.379/pdfjs-4.0.379-dist.zip) and extract it to `libs/ktem/ktem/assets/prebuilt`

- +

+ - Start the web server:

@@ -128,6 +128,10 @@ Default username / password are: `admin` / `admin`. You can setup additional use

+## Setup local models (for local / private RAG)

+

+See [Local model setup](docs/local_model.md).

+

## Customize your application

By default, all application data are stored in `./ktem_app_data` folder. You can backup or copy this folder to move your installation to a new machine.

@@ -225,7 +229,7 @@ ollama pull nomic-embed-text

Set the model names on web UI and make it as default.

-

+

##### Using GGUF with llama-cpp-python

diff --git a/doc_env_reqs.txt b/doc_env_reqs.txt

index 342522a..48a321f 100644

--- a/doc_env_reqs.txt

+++ b/doc_env_reqs.txt

@@ -3,6 +3,7 @@ mkdocstrings[python]

mkdocs-material

mkdocs-gen-files

mkdocs-literate-nav

+mkdocs-video

mkdocs-git-revision-date-localized-plugin

mkdocs-section-index

mdx_truly_sane_lists

diff --git a/docs/images/index-embedding.png b/docs/images/index-embedding.png

new file mode 100644

index 0000000..13d14e1

Binary files /dev/null and b/docs/images/index-embedding.png differ

diff --git a/docs/images/llm-default.png b/docs/images/llm-default.png

new file mode 100644

index 0000000..600961c

Binary files /dev/null and b/docs/images/llm-default.png differ

diff --git a/docs/images/retrieval-setting.png b/docs/images/retrieval-setting.png

new file mode 100644

index 0000000..6ff9eae

Binary files /dev/null and b/docs/images/retrieval-setting.png differ

diff --git a/docs/local_model.md b/docs/local_model.md

new file mode 100644

index 0000000..ed5c238

--- /dev/null

+++ b/docs/local_model.md

@@ -0,0 +1,90 @@

+# Setup local LLMs & Embedding models

+

+## Prepare local models

+

+#### NOTE

+

+If you are using the Docker image, replace `http://localhost` with `http://host.docker.internal` so the container can reach services running on the host machine. See [more detail](https://stackoverflow.com/questions/31324981/how-to-access-host-port-from-docker-container).

+

+### Ollama OpenAI compatible server (recommended)

+

+Install [ollama](https://github.com/ollama/ollama) and start the application.

+

+Pull your models, e.g.:

+

+```

+ollama pull llama3.1:8b

+ollama pull nomic-embed-text

+```

+

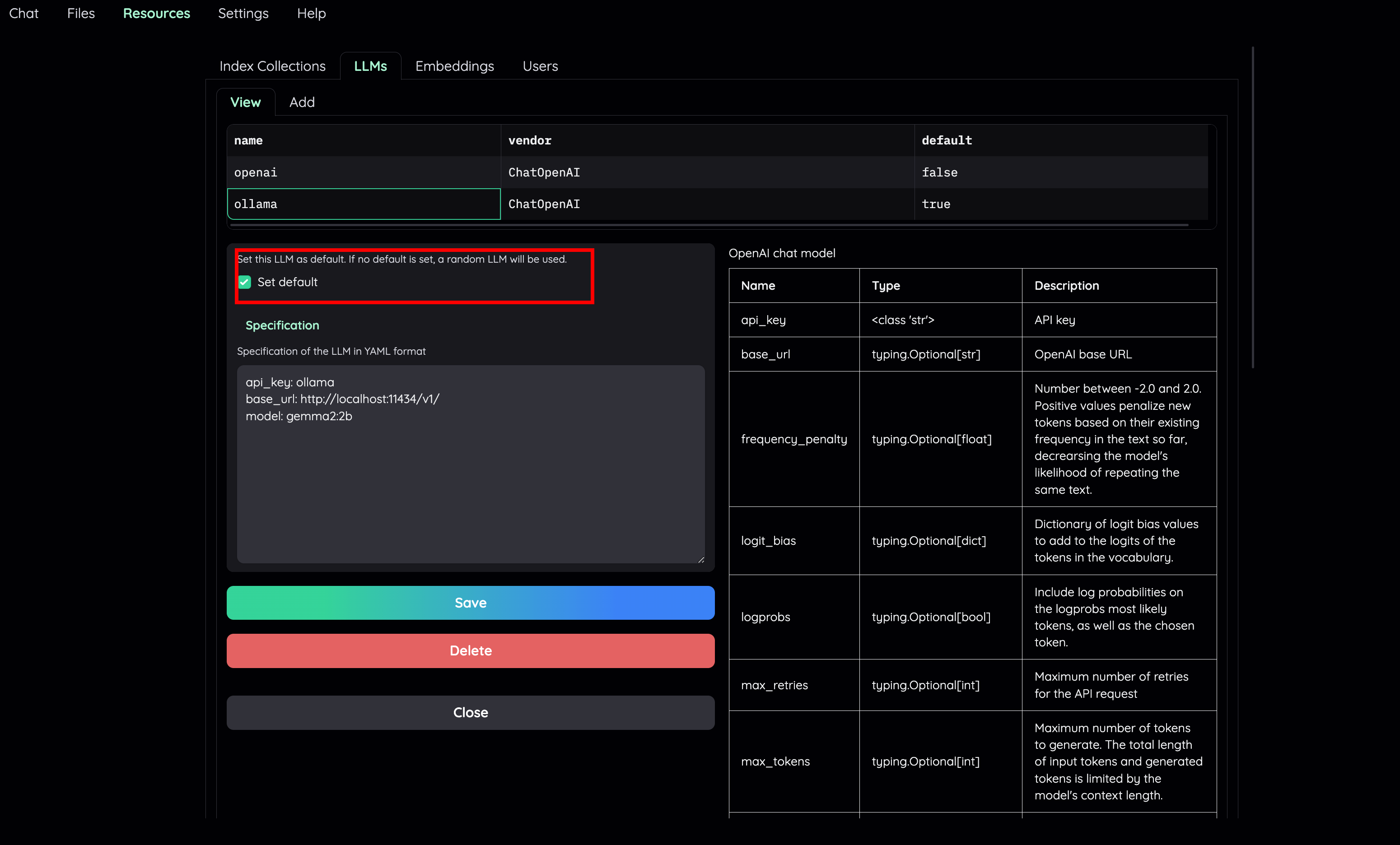

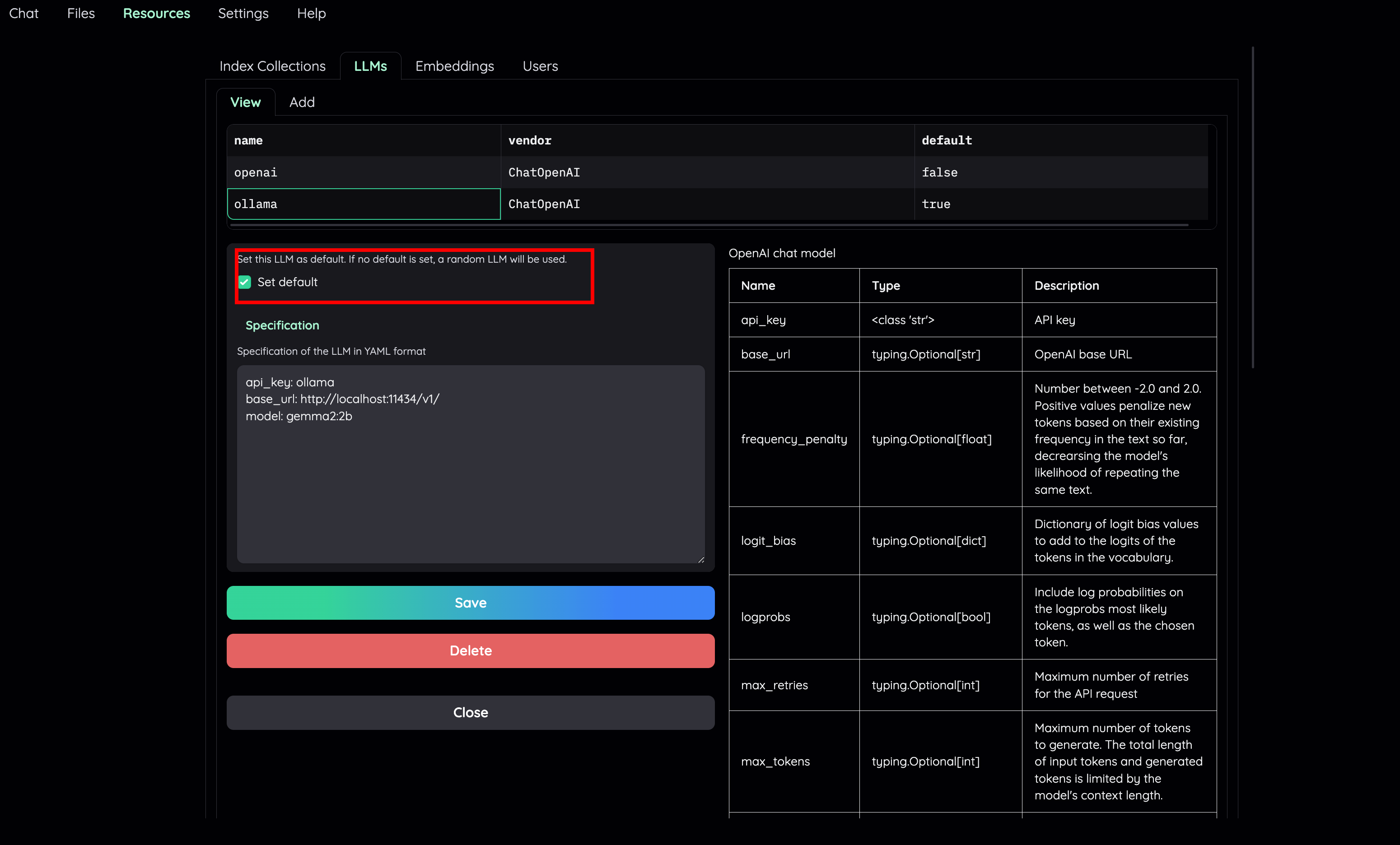

+Set up the LLM and Embedding models on the Resources tab with type OpenAI. Set these model parameters to connect to Ollama:

+

+```

+api_key: ollama

+base_url: http://localhost:11434/v1/

+model: llama3.1:8b (for llm) | nomic-embed-text (for embedding)

+```

+

+

+

+### oobabooga/text-generation-webui OpenAI compatible server

+

+Install [oobabooga/text-generation-webui](https://github.com/oobabooga/text-generation-webui/).

+

+Follow the setup guide to download your models (GGUF, HF).

+Also take a look at [OpenAI compatible server](https://github.com/oobabooga/text-generation-webui/wiki/12-%E2%80%90-OpenAI-API) for detailed instructions.

+

+Here is a short version:

+

+```

+# install sentence-transformers, needed to create embeddings

+pip install sentence_transformers

+# run this from the text-generation-webui source directory

+python server.py --api

+```

+

+Use the `Models` tab to download a new model, then press Load.

+

+Set up the LLM and Embedding models on the Resources tab with type OpenAI. Set these model parameters to connect to `text-generation-webui`:

+

+```

+api_key: dummy

+base_url: http://localhost:5000/v1/

+model: any

+```

+

+### llama-cpp-python server (LLM only)

+

+See [llama-cpp-python OpenAI server](https://llama-cpp-python.readthedocs.io/en/latest/server/).

+

+Download any GGUF model weights from Hugging Face or another source. Place them somewhere on your local machine.

+

+Run

+

+```

+LOCAL_MODEL= python scripts/serve_local.py

+```

+

+Set up the LLM on the Resources tab with type OpenAI. Set these model parameters to connect to `llama-cpp-python`:

+

+```

+api_key: dummy

+base_url: http://localhost:8000/v1/

+model: model_name

+```

+

+## Use local models for RAG

+

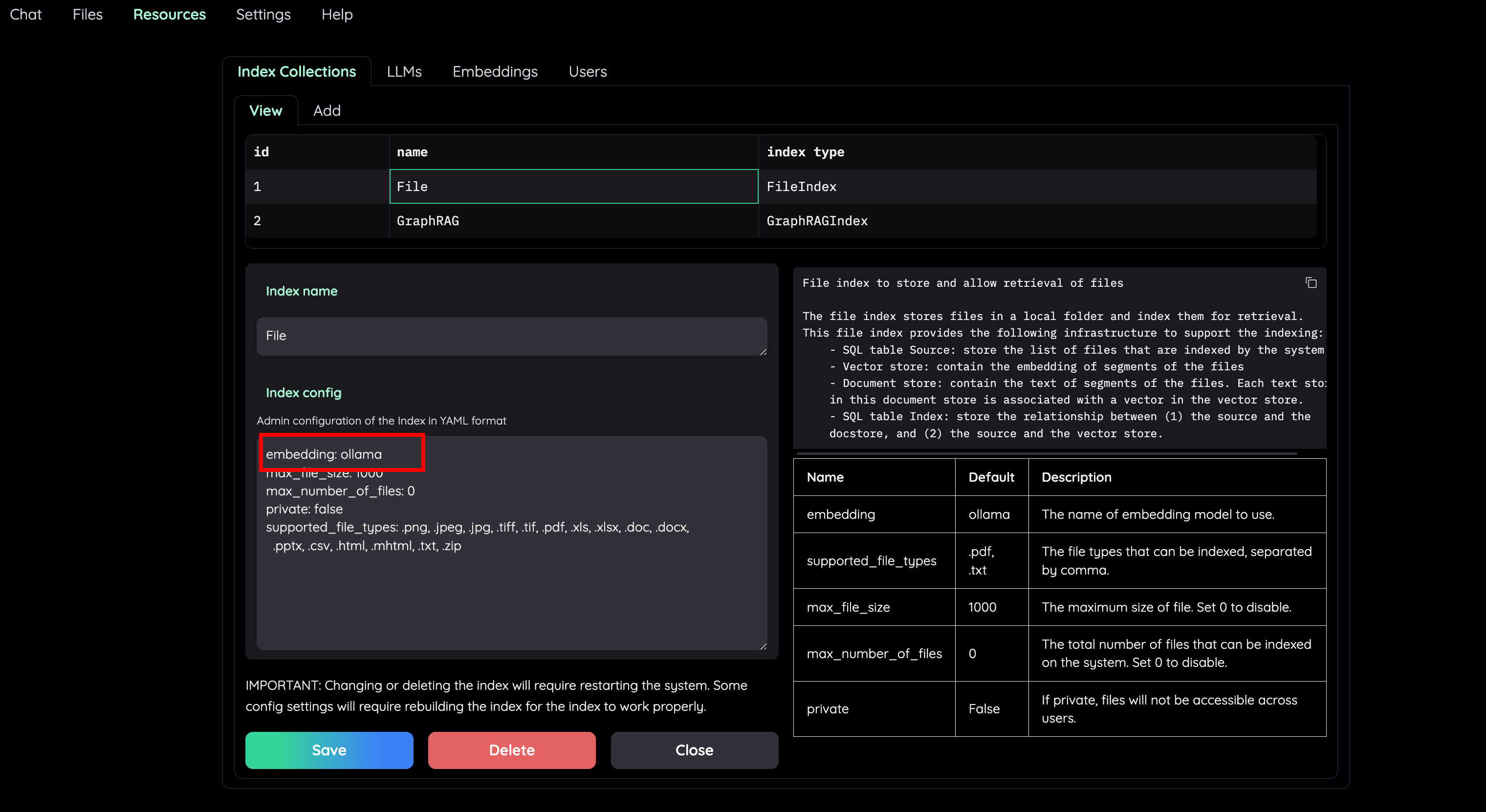

+- Set default LLM and Embedding model to a local variant.

+

+

+

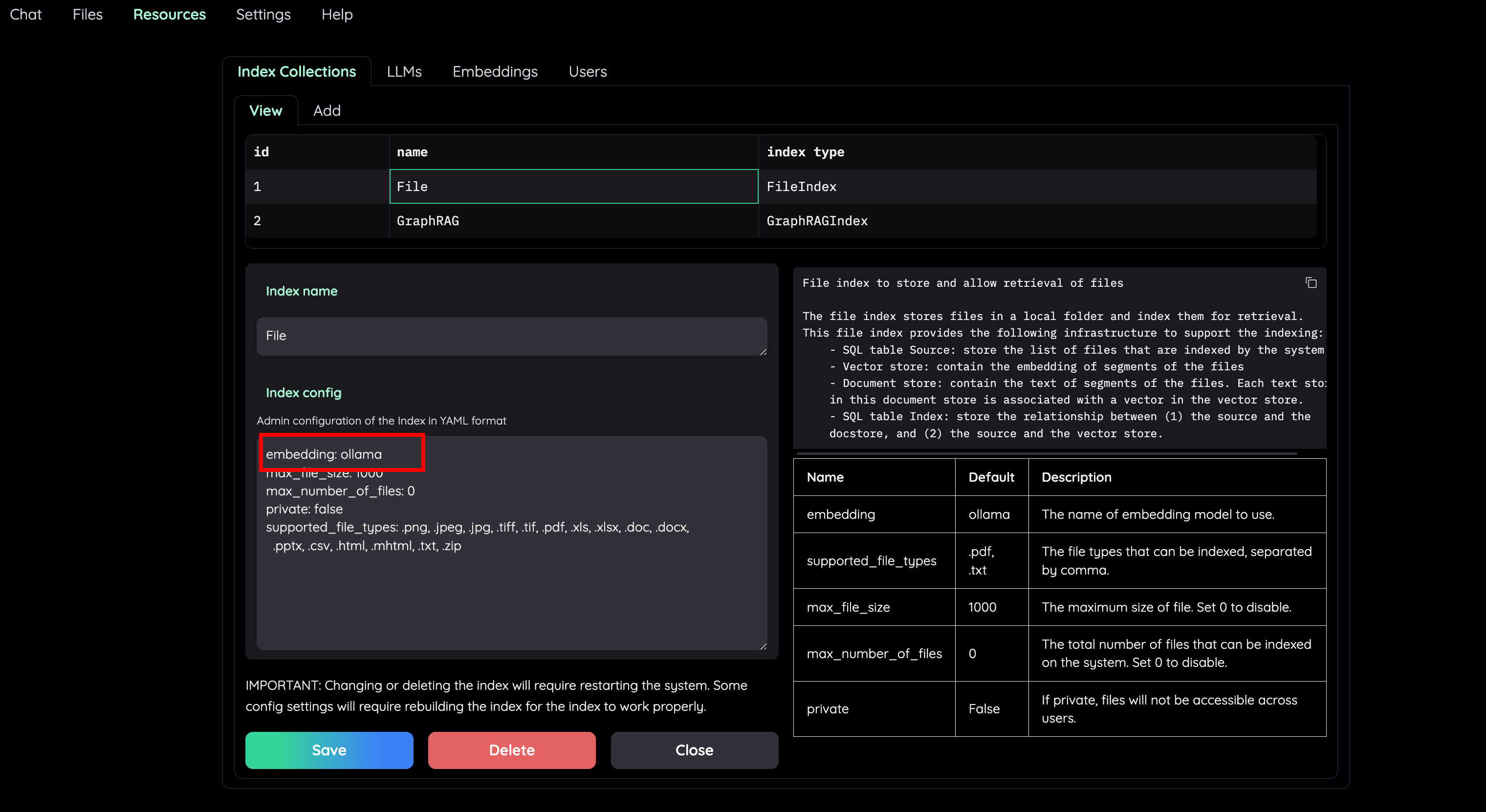

+- Set the embedding model for the File Collection to a local model (e.g. `ollama`).

+

+

+

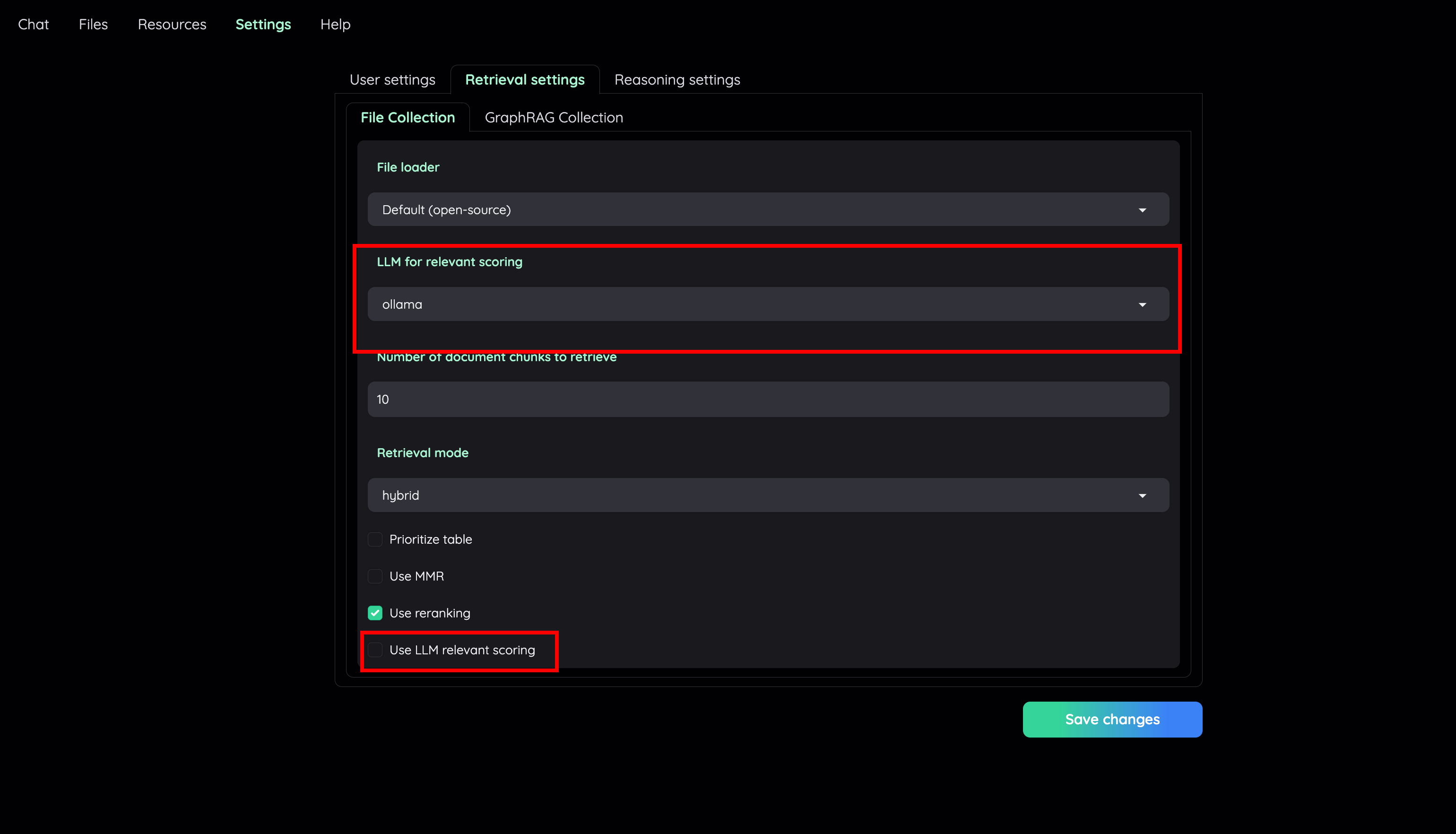

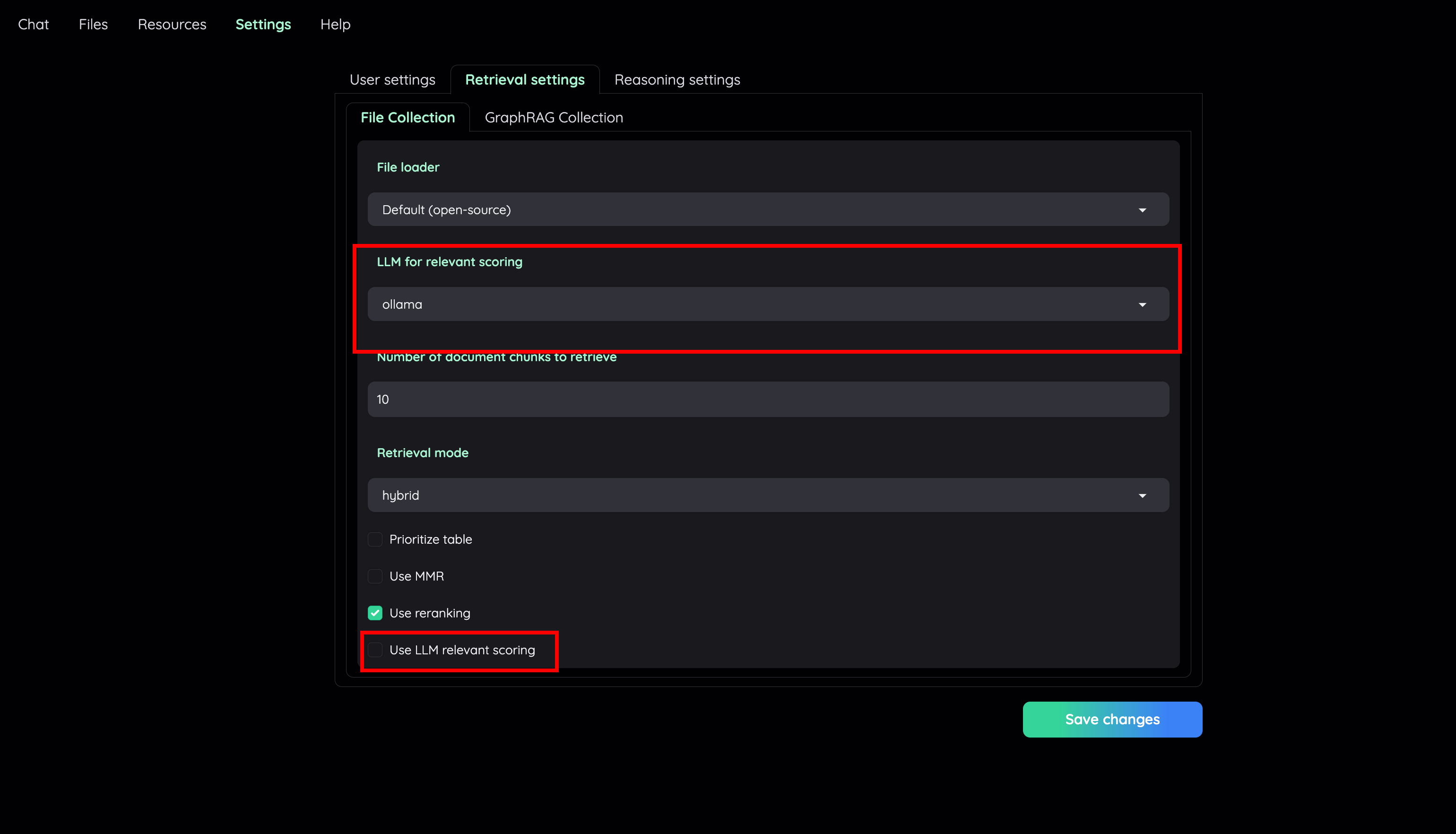

+- Go to Retrieval settings and choose a local model (e.g. `ollama`) as the LLM relevant scoring model. Alternatively, disable this feature if your machine cannot handle many parallel LLM requests.

+

+

+

+You are set! Start a new conversation to test your local RAG pipeline.
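Reviewer note on `docs/local_model.md`: all three servers described above are configured with the same three fields (`api_key`, `base_url`, `model`), and the Docker note only changes the host part of `base_url`. The following is a minimal Python sketch, standard library only, of how those values could map onto an OpenAI-style chat request. The helper names `resolve_base_url` and `build_chat_request` and the `in_docker` flag are hypothetical and not part of kotaemon; the `/chat/completions` route is the usual OpenAI-compatible path and is assumed to be what the server exposes.

```python
import json
import urllib.request
from urllib.parse import urlsplit, urlunsplit


def resolve_base_url(base_url: str, in_docker: bool) -> str:
    """Swap `localhost` for `host.docker.internal` when running inside Docker."""
    parts = urlsplit(base_url)
    if in_docker and parts.hostname == "localhost":
        netloc = "host.docker.internal"
        if parts.port:
            netloc += f":{parts.port}"
        parts = parts._replace(netloc=netloc)
    return urlunsplit(parts)


def build_chat_request(
    base_url: str, api_key: str, model: str, prompt: str
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Values taken from the Ollama section of the guide.
base_url = resolve_base_url("http://localhost:11434/v1/", in_docker=True)
request = build_chat_request(base_url, "ollama", "llama3.1:8b", "Say hello.")
print(request.full_url)
```

Passing `request` to `urllib.request.urlopen` would actually send it; that requires the server to be running and the model to be pulled already, so the sketch stops at building the request.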

diff --git a/flowsettings.py b/flowsettings.py

index aa3489c..8eaba8b 100644

--- a/flowsettings.py

+++ b/flowsettings.py

@@ -152,6 +152,7 @@ if config("LOCAL_MODEL", default=""):

"__type__": "kotaemon.llms.ChatOpenAI",

"base_url": "http://localhost:11434/v1/",

"model": config("LOCAL_MODEL", default="llama3.1:8b"),

+ "api_key": "ollama",

},

"default": False,

}

@@ -160,6 +161,7 @@ if config("LOCAL_MODEL", default=""):

"__type__": "kotaemon.embeddings.OpenAIEmbeddings",

"base_url": "http://localhost:11434/v1/",

"model": config("LOCAL_MODEL_EMBEDDINGS", default="nomic-embed-text"),

+ "api_key": "ollama",

},

"default": False,

}
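Reviewer note on `flowsettings.py`: the two hunks above add `"api_key": "ollama"` next to the existing `base_url` and `model` keys. The likely motivation is that OpenAI-style clients refuse to start without some key, while Ollama ignores its value; that reading is an assumption, not something the diff states. A minimal sketch of the resulting pair of specs follows. It uses `os.environ` as a stand-in for the `config(...)` helper seen in the diff, and the function name `local_ollama_specs` is hypothetical.

```python
import os

OLLAMA_BASE_URL = "http://localhost:11434/v1/"


def local_ollama_specs(env=None):
    """Return (llm_spec, embedding_spec) dicts pointing at a local Ollama server."""
    env = os.environ if env is None else env
    llm_spec = {
        "__type__": "kotaemon.llms.ChatOpenAI",
        "base_url": OLLAMA_BASE_URL,
        "model": env.get("LOCAL_MODEL", "llama3.1:8b"),
        # Placeholder key: assumed to be required by the client, unused by Ollama.
        "api_key": "ollama",
    }
    embedding_spec = {
        "__type__": "kotaemon.embeddings.OpenAIEmbeddings",
        "base_url": OLLAMA_BASE_URL,
        "model": env.get("LOCAL_MODEL_EMBEDDINGS", "nomic-embed-text"),
        "api_key": "ollama",
    }
    return llm_spec, embedding_spec


llm_spec, embedding_spec = local_ollama_specs({"LOCAL_MODEL": "llama3.1:8b"})
print(llm_spec["model"], embedding_spec["model"])
```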

diff --git a/scripts/run_linux.sh b/scripts/run_linux.sh

index 8eff787..ec2f960 100755

--- a/scripts/run_linux.sh

+++ b/scripts/run_linux.sh

@@ -74,7 +74,7 @@ function activate_conda_env() {

source "$conda_root/etc/profile.d/conda.sh" # conda init

conda activate "$env_dir" || {

- echo "Failed to activate environment. Please remove $env_dir and run the installer again"

+ echo "Failed to activate environment. Please remove $env_dir and run the installer again."

exit 1

}

echo "Activate conda environment at $CONDA_PREFIX"

@@ -143,6 +143,32 @@ function setup_local_model() {

python $(pwd)/scripts/serve_local.py

}

+function download_and_unzip() {

+ local url=$1

+ local dest_dir=$2

+

+ # Skip the download if the destination directory already exists

+ if [ -d "$dest_dir" ]; then

+ echo "Destination directory $dest_dir already exists. Skipping download."

+ return

+ fi

+

+ mkdir -p "$dest_dir"

+

+ # Download the ZIP file

+ local zip_file="${dest_dir}/downloaded.zip"

+ echo "Downloading $url to $zip_file"

+ curl -L -o "$zip_file" "$url"

+

+ # Unzip the file to the destination directory

+ echo "Unzipping $zip_file to $dest_dir"

+ unzip -o "$zip_file" -d "$dest_dir"

+

+ # Clean up the downloaded ZIP file

+ rm "$zip_file"

+ echo "Download and unzip completed successfully."

+}

+

function launch_ui() {

python $(pwd)/app.py || {

echo "" && echo "Will exit now..."

@@ -167,6 +193,11 @@ conda_root="${install_dir}/conda"

env_dir="${install_dir}/env"

python_version="3.10"

+pdf_js_version="4.0.379"

+pdf_js_dist_name="pdfjs-${pdf_js_version}-dist"

+pdf_js_dist_url="https://github.com/mozilla/pdf.js/releases/download/v${pdf_js_version}/${pdf_js_dist_name}.zip"

+target_pdf_js_dir="$(pwd)/libs/ktem/ktem/assets/prebuilt/${pdf_js_dist_name}"

+

check_path_for_spaces

print_highlight "Setting up Miniconda"

@@ -179,6 +210,9 @@ activate_conda_env

print_highlight "Installing requirements"

install_dependencies

+print_highlight "Downloading and unzipping PDF.js"

+download_and_unzip "$pdf_js_dist_url" "$target_pdf_js_dir"

+

print_highlight "Setting up a local model"

setup_local_model
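Reviewer note on `download_and_unzip`: the shell helper creates `$dest_dir` before calling `curl`, so a failed download leaves an empty directory behind and the next run skips the download. One possible alternative ordering is sketched below in Python for illustration only; it is not a proposed replacement for the shell code. The sketch downloads and extracts into a temporary directory and moves the result into place only once both steps succeed.

```python
import shutil
import tempfile
import urllib.request
import zipfile
from pathlib import Path


def download_and_unzip(url: str, dest_dir: str) -> bool:
    """Download a ZIP from `url` and extract it into `dest_dir`.

    Returns False without downloading when `dest_dir` already exists.
    """
    dest = Path(dest_dir)
    if dest.is_dir():
        print(f"Destination directory {dest} already exists. Skipping download.")
        return False

    with tempfile.TemporaryDirectory() as tmp:
        zip_path = Path(tmp) / "downloaded.zip"
        staging = Path(tmp) / "extracted"
        staging.mkdir()

        # urlopen follows HTTP redirects, similar to `curl -L`.
        with urllib.request.urlopen(url) as response, open(zip_path, "wb") as out:
            shutil.copyfileobj(response, out)

        with zipfile.ZipFile(zip_path) as archive:
            archive.extractall(staging)

        # Only now create the destination, so a failure above leaves no trace.
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(staging), str(dest))

    print("Download and unzip completed successfully.")
    return True
```

The same effect could probably be had in the shell scripts by downloading to a temporary file first and calling `mkdir -p` only after `curl` succeeds.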

diff --git a/scripts/run_macos.sh b/scripts/run_macos.sh

index 011818f..5ba335b 100755

--- a/scripts/run_macos.sh

+++ b/scripts/run_macos.sh

@@ -144,6 +144,32 @@ function setup_local_model() {

python $(pwd)/scripts/serve_local.py

}

+function download_and_unzip() {

+ local url=$1

+ local dest_dir=$2

+

+ # Skip the download if the destination directory already exists

+ if [ -d "$dest_dir" ]; then

+ echo "Destination directory $dest_dir already exists. Skipping download."

+ return

+ fi

+

+ mkdir -p "$dest_dir"

+

+ # Download the ZIP file

+ local zip_file="${dest_dir}/downloaded.zip"

+ echo "Downloading $url to $zip_file"

+ curl -L -o "$zip_file" "$url"

+

+ # Unzip the file to the destination directory

+ echo "Unzipping $zip_file to $dest_dir"

+ unzip -o "$zip_file" -d "$dest_dir"

+

+ # Clean up the downloaded ZIP file

+ rm "$zip_file"

+ echo "Download and unzip completed successfully."

+}

+

function launch_ui() {

python $(pwd)/app.py || {

echo "" && echo "Will exit now..."

@@ -171,6 +197,11 @@ conda_root="${install_dir}/conda"

env_dir="${install_dir}/env"

python_version="3.10"

+pdf_js_version="4.0.379"

+pdf_js_dist_name="pdfjs-${pdf_js_version}-dist"

+pdf_js_dist_url="https://github.com/mozilla/pdf.js/releases/download/v${pdf_js_version}/${pdf_js_dist_name}.zip"

+target_pdf_js_dir="$(pwd)/libs/ktem/ktem/assets/prebuilt/${pdf_js_dist_name}"

+

check_path_for_spaces

print_highlight "Setting up Miniconda"

@@ -183,6 +214,9 @@ activate_conda_env

print_highlight "Installing requirements"

install_dependencies

+print_highlight "Downloading and unzipping PDF.js"

+download_and_unzip "$pdf_js_dist_url" "$target_pdf_js_dir"

+

print_highlight "Setting up a local model"

setup_local_model
