Setup app data dir (#32)

* setup local data dir
* update readme
* update chat panel
* update help page

.gitignore

@@ -468,3 +468,6 @@ storage/*
# Conda and env storages
*install_dir/
doc_env/

# application data
ktem_app_data/

README.md

@@ -2,7 +2,12 @@
[User Guide](https://cinnamon.github.io/kotaemon/) | [Developer Guide](https://cinnamon.github.io/kotaemon/development/)
[Source Code](https://github.com/Cinnamon/kotaemon) |
[Demo](https://huggingface.co/spaces/lone17/kotaemon-app)

[User Guide](https://cinnamon.github.io/kotaemon/) |
[Developer Guide](https://cinnamon.github.io/kotaemon/development/) |
[Feedback](https://github.com/Cinnamon/kotaemon/issues)

[](https://www.python.org/downloads/release/python-31013/)
[](https://github.com/psf/black)

@@ -68,3 +73,6 @@ open source.
```shell
pytest tests
```

Please refer to the [Developer Guide](https://cinnamon.github.io/kotaemon/development/)
for more details.

@@ -1,14 +1,30 @@
import os
from inspect import currentframe, getframeinfo
from pathlib import Path

from decouple import config
from platformdirs import user_cache_dir
from theflow.settings.default import *  # noqa

user_cache_dir = Path(
    user_cache_dir(str(config("KH_APP_NAME", default="ktem")), "Cinnamon")
)
user_cache_dir.mkdir(parents=True, exist_ok=True)
cur_frame = currentframe()
if cur_frame is None:
    raise ValueError("Cannot get the current frame.")
this_file = getframeinfo(cur_frame).filename
this_dir = Path(this_file).parent

# App can be run from anywhere and it's not trivial to decide where to store app data.
# So let's use the same directory as the flowsetting.py file.
KH_APP_DATA_DIR = this_dir / "ktem_app_data"
KH_APP_DATA_DIR.mkdir(parents=True, exist_ok=True)

# User data directory
KH_USER_DATA_DIR = KH_APP_DATA_DIR / "user_data"
KH_USER_DATA_DIR.mkdir(parents=True, exist_ok=True)

# HF models can be big, let's store them in the app data directory so that it's easier
# for users to manage their storage.
# ref: https://huggingface.co/docs/huggingface_hub/en/guides/manage-cache
os.environ["HF_HOME"] = str(KH_APP_DATA_DIR / "huggingface")
os.environ["HF_HUB_CACHE"] = str(KH_APP_DATA_DIR / "huggingface")

COHERE_API_KEY = config("COHERE_API_KEY", default="")
KH_MODE = "dev"
@@ -20,16 +36,16 @@ KH_FEATURE_USER_MANAGEMENT_PASSWORD = str(
    config("KH_FEATURE_USER_MANAGEMENT_PASSWORD", default="XsdMbe8zKP8KdeE@")
)
KH_ENABLE_ALEMBIC = False
KH_DATABASE = f"sqlite:///{user_cache_dir / 'sql.db'}"
KH_FILESTORAGE_PATH = str(user_cache_dir / "files")
KH_DATABASE = f"sqlite:///{KH_USER_DATA_DIR / 'sql.db'}"
KH_FILESTORAGE_PATH = str(KH_USER_DATA_DIR / "files")

KH_DOCSTORE = {
    "__type__": "kotaemon.storages.SimpleFileDocumentStore",
    "path": str(user_cache_dir / "docstore"),
    "path": str(KH_USER_DATA_DIR / "docstore"),
}
KH_VECTORSTORE = {
    "__type__": "kotaemon.storages.ChromaVectorStore",
    "path": str(user_cache_dir / "vectorstore"),
    "path": str(KH_USER_DATA_DIR / "vectorstore"),
}
KH_LLMS = {}
KH_EMBEDDINGS = {}
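The settings above anchor everything to a `ktem_app_data` folder next to the settings file: the SQLite database, uploaded files, document store and vector store live under `user_data`, and the Hugging Face cache is redirected through `HF_HOME` and `HF_HUB_CACHE`. As a rough sketch, assuming only the standard library and a temporary folder standing in for the settings directory, the same layout can be built and inspected like this (`build_layout` is a hypothetical helper, not part of ktem):

```python
import os
import tempfile
from pathlib import Path


def build_layout(settings_dir: Path) -> dict[str, str]:
    """Hypothetical helper mirroring the data-dir layout from the settings diff."""
    app_data = settings_dir / "ktem_app_data"
    user_data = app_data / "user_data"
    # parents=True and exist_ok=True keep repeated startups from failing
    user_data.mkdir(parents=True, exist_ok=True)

    # Redirect the Hugging Face cache into the app data folder, as the diff does
    os.environ["HF_HOME"] = str(app_data / "huggingface")
    os.environ["HF_HUB_CACHE"] = str(app_data / "huggingface")

    return {
        "database": f"sqlite:///{user_data / 'sql.db'}",
        "files": str(user_data / "files"),
        "docstore": str(user_data / "docstore"),
        "vectorstore": str(user_data / "vectorstore"),
    }


with tempfile.TemporaryDirectory() as tmp:
    layout = build_layout(Path(tmp))
    for name, value in layout.items():
        print(f"{name}: {value}")
```

With an absolute base directory on Linux or macOS, the database value comes out as `sqlite:////` followed by the absolute path (four slashes), which is how SQLAlchemy-style URLs spell an absolute SQLite path.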

@@ -77,7 +77,8 @@ button.selected {
}

#main-chat-bot {
  flex: 1;
  background: var(--background-fill-primary);
  flex: auto;
}

#chat-area {

libs/ktem/ktem/assets/md/about.md

@@ -0,0 +1,10 @@
# About Kotaemon

An open-source tool for you to chat with your documents.

[Source Code](https://github.com/Cinnamon/kotaemon) |
[Demo](https://huggingface.co/spaces/lone17/kotaemon-app)

[User Guide](https://cinnamon.github.io/kotaemon/) |
[Developer Guide](https://cinnamon.github.io/kotaemon/development/) |
[Feedback](https://github.com/Cinnamon/kotaemon/issues)

@@ -1,25 +0,0 @@
# About Cinnamon AI

Welcome to **Cinnamon AI**, a pioneering force in the field of artificial intelligence and document processing. At Cinnamon AI, we are committed to revolutionizing the way businesses handle information, leveraging cutting-edge technologies to streamline and automate data extraction processes.

## Our Mission

At the core of our mission is the pursuit of innovation that simplifies complex tasks. We strive to empower organizations with transformative AI solutions that enhance efficiency, accuracy, and productivity. Cinnamon AI is dedicated to bridging the gap between human intelligence and machine capabilities, making data extraction and analysis seamless and intuitive.

## Key Highlights

- **Advanced Technology:** Cinnamon AI specializes in harnessing the power of natural language processing (NLP) and machine learning to develop sophisticated solutions for document understanding and data extraction.

- **Industry Impact:** We cater to diverse industries, providing tailor-made AI solutions that address the unique challenges and opportunities within each sector. From finance to healthcare, our technology is designed to make a meaningful impact.

- **Global Presence:** With a global perspective, Cinnamon AI operates on an international scale, collaborating with businesses and enterprises around the world to elevate their data processing capabilities.

## Why Choose Cinnamon AI

- **Innovation:** Our commitment to innovation is evident in our continual pursuit of technological excellence. We stay ahead of the curve to deliver solutions that meet the evolving needs of the digital landscape.

- **Reliability:** Clients trust Cinnamon AI for reliable, accurate, and scalable AI solutions. Our track record speaks to our dedication to quality and customer satisfaction.

- **Collaboration:** We believe in the power of collaboration. By working closely with our clients, we tailor our solutions to their specific requirements, fostering long-term partnerships built on mutual success.

Explore the future of data processing with Cinnamon AI – where intelligence meets innovation.

@@ -1,19 +0,0 @@
# About Kotaemon

Welcome to the future of language technology – Cinnamon AI proudly presents our latest innovation, **Kotaemon**. At Cinnamon AI, we believe in pushing the boundaries of what's possible with natural language processing, and Kotaemon embodies the pinnacle of our endeavors. Designed to empower businesses and developers alike, Kotaemon is not just a product; it's a manifestation of our commitment to enhancing human-machine interaction.

## Key Features

- **Cognitive Understanding:** Kotaemon boasts advanced cognitive understanding capabilities, allowing it to interpret and respond to natural language queries with unprecedented accuracy. Whether you're building chatbots, virtual assistants, or language-driven applications, Kotaemon ensures a nuanced and contextually rich user experience.

- **Versatility:** From analyzing vast textual datasets to generating coherent and contextually relevant responses, Kotaemon adapts seamlessly to diverse use cases. Whether you're in customer support, content creation, or data analysis, Kotaemon is your versatile companion in navigating the linguistic landscape.

- **Scalability:** Built with scalability in mind, Kotaemon is designed to meet the evolving needs of your business. As your language-related tasks grow in complexity, Kotaemon scales with you, providing a robust foundation for future innovation and expansion.

- **Ethical AI:** Cinnamon AI is committed to responsible and ethical AI development. Kotaemon reflects our dedication to fairness, transparency, and unbiased language processing, ensuring that your applications uphold the highest ethical standards.

## Why Kotaemon?

Kotaemon is not just a tool; it's a catalyst for unlocking the true potential of natural language understanding. Whether you're a developer aiming to enhance user experiences or a business leader seeking to leverage language technology, Kotaemon is your partner in navigating the intricacies of human communication.

Join us on this transformative journey with Kotaemon – where language meets innovation, and understanding becomes seamless. Cinnamon AI: Redefining the future of natural language processing.

@@ -1,6 +1,6 @@
# Changelogs

## v1.0.0
## v0.0.1

- Chat: interact with chatbot with simple pipeline, rewoo and react agents
- Chat: conversation management: create, delete, rename conversations

libs/ktem/ktem/assets/md/usage.md

@@ -0,0 +1,138 @@

|

||||

# Basic Usage

|

||||

|

||||

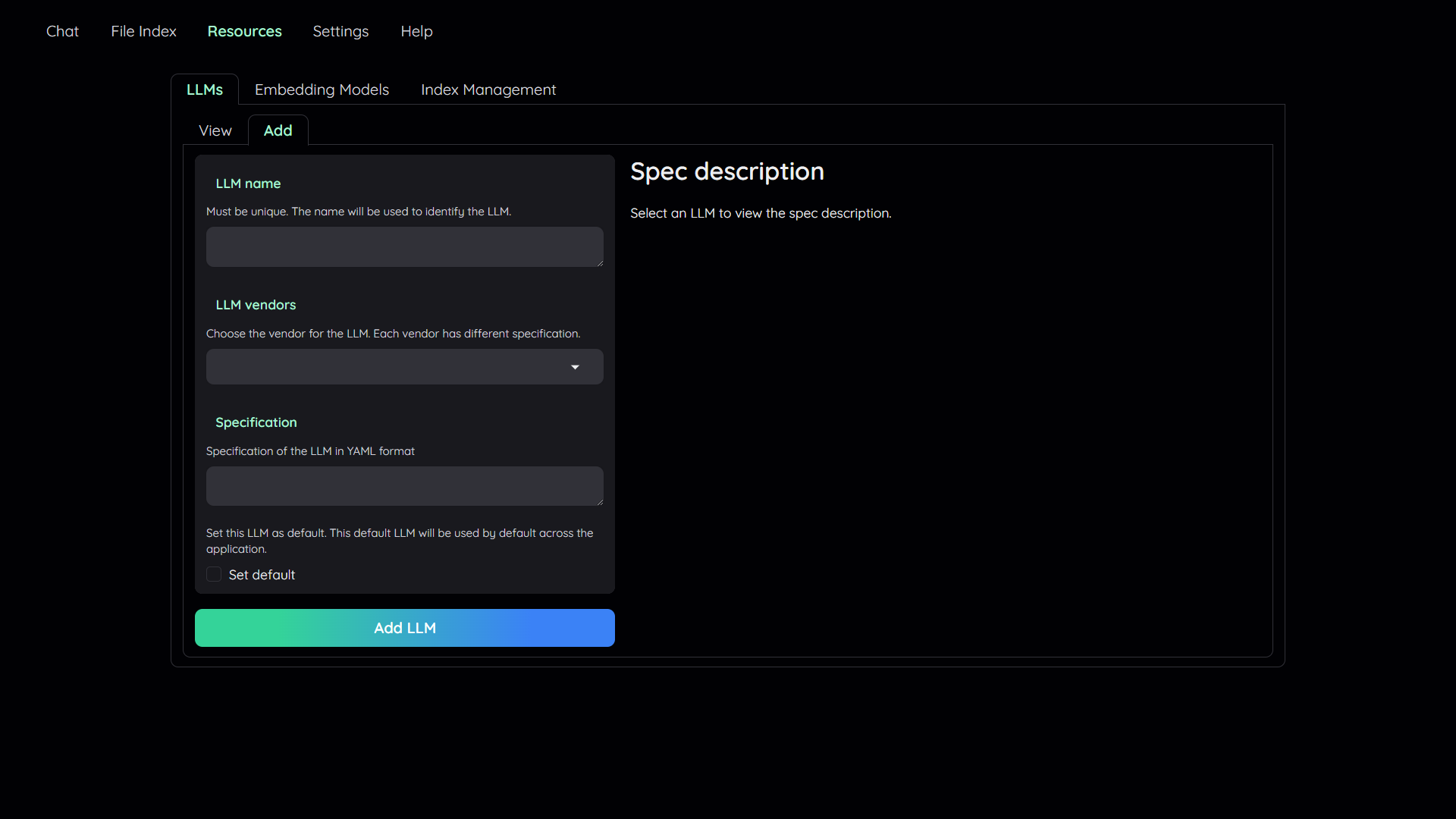

## 1. Add your AI models

|

||||

|

||||

|

||||

|

||||

- The tool uses Large Language Model (LLMs) to perform various tasks in a QA pipeline.

|

||||

So, you need to provide the application with access to the LLMs you want

|

||||

to use.

|

||||

- You only need to provide at least one. However, tt is recommended that you include all the LLMs

|

||||

that you have access to, you will be able to switch between them while using the

|

||||

application.

|

||||

|

||||

To add a model:

|

||||

|

||||

1. Navigate to the `Resources` tab.

|

||||

2. Select the `LLMs` sub-tab.

|

||||

3. Select the `Add` sub-tab.

|

||||

4. Config the model to add:

|

||||

- Give it a name.

|

||||

- Pick a vendor/provider (e.g. `ChatOpenAI`).

|

||||

- Provide the specifications.

|

||||

- (Optional) Set the model as default.

|

||||

5. Click `Add` to add the model.

|

||||

6. Select `Embedding Models` sub-tab and repeat the step 3 to 5 to add an embedding model.

|

||||

|

||||

<details markdown>

<summary>(Optional) Configure model via the .env file</summary>

Alternatively, you can configure the models via the `.env` file with the information needed to connect to the LLMs. This file is located in
the application folder. If you don't see it, you can create one.

Currently, the following providers are supported:

### OpenAI

In the `.env` file, set the `OPENAI_API_KEY` variable to your OpenAI API key to
enable access to OpenAI's models. Other variables can be modified as well;
feel free to edit them to fit your case. Otherwise, the default parameters should
work for most people.

```shell
OPENAI_API_BASE=https://api.openai.com/v1
OPENAI_API_KEY=<your OpenAI API key here>
OPENAI_CHAT_MODEL=gpt-3.5-turbo
OPENAI_EMBEDDINGS_MODEL=text-embedding-ada-002
```
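The `.env` file is a plain list of `KEY=VALUE` lines. The settings module in this commit reads values through python-decouple's `config(...)`; the stdlib-only sketch below is not decouple's parser, only an illustration of how such a file maps to key/value pairs, with a default used when a key is absent. `parse_env` is a hypothetical helper, quoting and escaping rules are ignored, and `sk-placeholder` is a dummy key:

```python
def parse_env(text: str) -> dict[str, str]:
    """Hypothetical minimal KEY=VALUE parser (no quoting or escaping rules)."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments, and lines without an assignment
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")  # split on the first "=" only
        values[key.strip()] = value.strip()
    return values


sample = """
OPENAI_API_BASE=https://api.openai.com/v1
OPENAI_API_KEY=sk-placeholder
OPENAI_CHAT_MODEL=gpt-3.5-turbo
"""

env = parse_env(sample)
# A missing key falls back to a default, similar in spirit to config(..., default=...)
embeddings_model = env.get("OPENAI_EMBEDDINGS_MODEL", "text-embedding-ada-002")
print(env["OPENAI_CHAT_MODEL"], embeddings_model)
```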

### Azure OpenAI

For OpenAI models via the Azure platform, you need to provide your Azure endpoint and API
key. You might also need to provide your deployment names for the chat model and the
embedding model, depending on how you set up your Azure deployment.

```shell
AZURE_OPENAI_ENDPOINT=
AZURE_OPENAI_API_KEY=
OPENAI_API_VERSION=2024-02-15-preview
AZURE_OPENAI_CHAT_DEPLOYMENT=gpt-35-turbo
AZURE_OPENAI_EMBEDDINGS_DEPLOYMENT=text-embedding-ada-002
```
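The Azure variables combine into a per-deployment request URL. Per the public Azure OpenAI REST reference, chat completions are served at `{endpoint}/openai/deployments/{deployment}/chat/completions?api-version={version}`; the sketch below only builds that string from the `.env` values and sends nothing. `chat_url` is a hypothetical helper, not something kotaemon exposes, and `my-resource` is a placeholder resource name:

```python
from urllib.parse import urlencode


def chat_url(endpoint: str, deployment: str, api_version: str) -> str:
    """Hypothetical helper: build the Azure OpenAI chat-completions URL."""
    base = endpoint.rstrip("/")  # tolerate a trailing slash in AZURE_OPENAI_ENDPOINT
    query = urlencode({"api-version": api_version})
    return f"{base}/openai/deployments/{deployment}/chat/completions?{query}"


url = chat_url(
    endpoint="https://my-resource.openai.azure.com/",  # placeholder resource name
    deployment="gpt-35-turbo",  # AZURE_OPENAI_CHAT_DEPLOYMENT
    api_version="2024-02-15-preview",  # OPENAI_API_VERSION
)
print(url)
```

Because the deployment name sits in the URL path, it must match the name chosen when the model was deployed in Azure, which may differ from the underlying model name.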

### Local models

- Pros:
  - Privacy. Your documents will be stored and processed locally.
  - Choices. There is a wide range of LLMs in terms of size, domain, and language to choose
    from.
  - Cost. It's free.
- Cons:
  - Quality. Local models are much smaller and thus have lower generative quality than
    paid APIs.
  - Speed. Local models are deployed on your machine, so the processing speed is
    limited by your hardware.

#### Find and download an LLM

You can search for and download an LLM to be run locally from the [Hugging Face
Hub](https://huggingface.co/models). Currently, these model formats are supported:

- GGUF

You should choose a model whose size is less than your device's available memory, leaving
about 2 GB free. For example, if you have 16 GB of RAM in total, of which 12 GB is available,
then you should choose a model that takes up at most 10 GB of RAM. Bigger models tend to
give better generation but also take more processing time.
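The sizing rule above boils down to subtracting a fixed reserve from the memory that is currently available; the 16 GB total does not enter the calculation. A minimal sketch, with the 2 GB headroom taken from the text as a rule of thumb rather than a hard limit (`max_model_size_gb` is a hypothetical name):

```python
def max_model_size_gb(available_gb: float, headroom_gb: float = 2.0) -> float:
    """Largest model size (GB) that still leaves `headroom_gb` of available memory free."""
    return max(available_gb - headroom_gb, 0.0)


# 16 GB installed, 12 GB currently available -> at most 10 GB for the model
print(max_model_size_gb(12))  # 10.0
```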

Here are some recommendations and their sizes in memory:

- [Qwen1.5-1.8B-Chat-GGUF](https://huggingface.co/Qwen/Qwen1.5-1.8B-Chat-GGUF/resolve/main/qwen1_5-1_8b-chat-q8_0.gguf?download=true):
  around 2 GB

#### Enable local models

To add a local model to the model pool, set the `LOCAL_MODEL` variable in the `.env`
file to the path of the model file.

```shell
LOCAL_MODEL=<full path to your model file>
```

Here is how to get the full path of your model file:

- On Windows 11: right-click the file and select `Copy as Path`.
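Before pointing `LOCAL_MODEL` at a file, it can help to confirm the path really is a GGUF model. GGUF files start with the 4-byte magic `GGUF`, so a rough check is: the file exists, has a `.gguf` extension, and begins with that magic. `looks_like_gguf` is a hypothetical helper, not part of kotaemon, and passing it does not guarantee the local runtime supports the file's quantization:

```python
import tempfile
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # GGUF files begin with these four bytes


def looks_like_gguf(path_str: str) -> bool:
    """Hypothetical sanity check for a LOCAL_MODEL value."""
    # Windows "Copy as path" wraps the path in double quotes, so strip them
    path = Path(path_str.strip().strip('"'))
    if not path.is_file() or path.suffix.lower() != ".gguf":
        return False
    with path.open("rb") as fh:
        return fh.read(4) == GGUF_MAGIC


# Demo with a stand-in file that only carries the magic bytes
with tempfile.TemporaryDirectory() as tmp:
    fake = Path(tmp) / "model.gguf"
    fake.write_bytes(GGUF_MAGIC + b"\x00" * 8)
    ok = looks_like_gguf(f'"{fake}"')
    missing = looks_like_gguf(str(Path(tmp) / "missing.gguf"))
print(ok, missing)  # True False
```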

|

||||

</details>

|

||||

|

||||

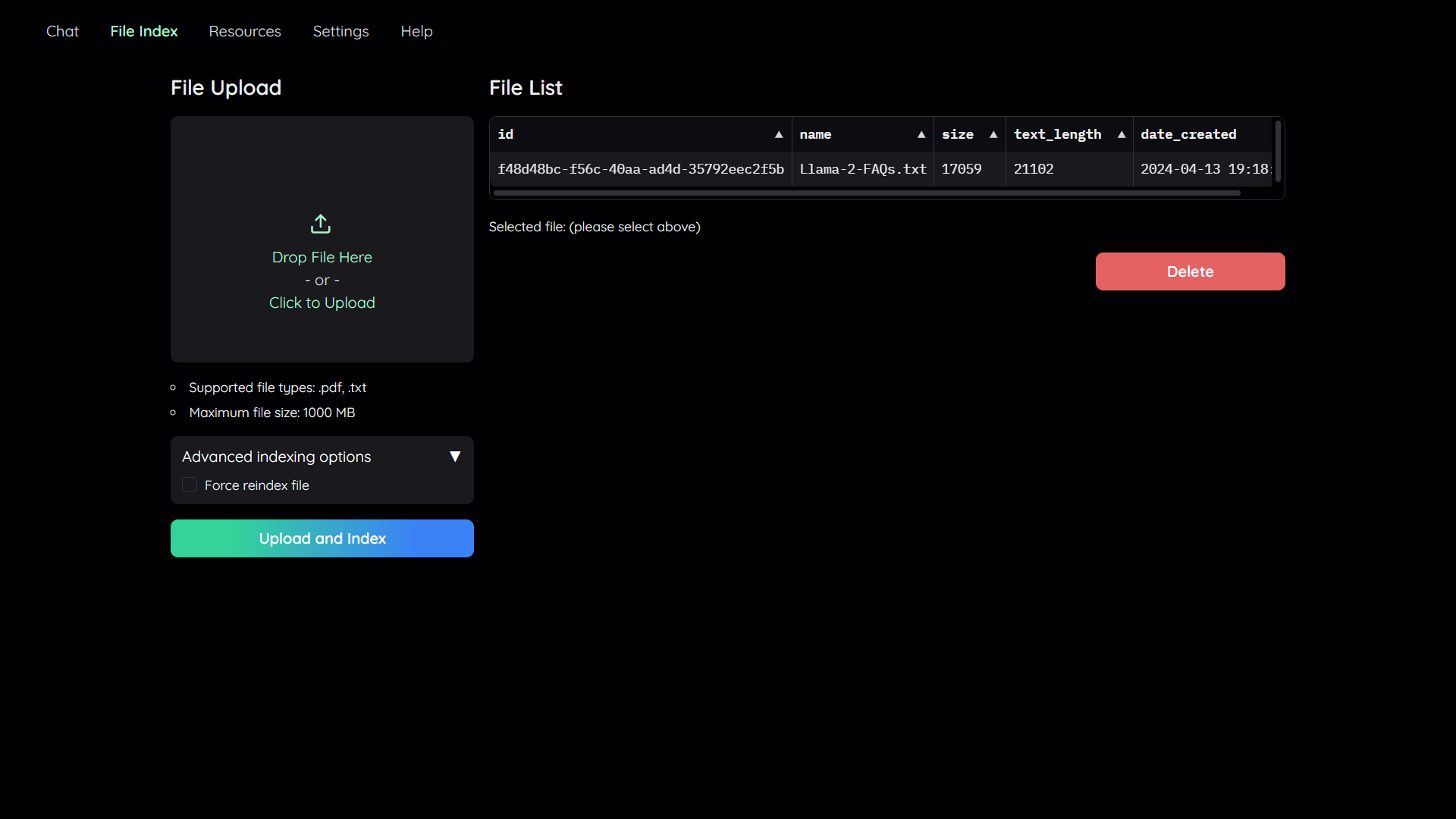

## 2. Upload your documents

|

||||

|

||||

|

||||

|

||||

In order to do QA on your documents, you need to upload them to the application first.

|

||||

Navigate to the `File Index` tab and you will see 2 sections:

|

||||

|

||||

1. File upload:

|

||||

- Drag and drop your file to the UI or select it from your file system.

|

||||

Then click `Upload and Index`.

|

||||

- The application will take some time to process the file and show a message once it is done.

|

||||

2. File list:

|

||||

- This section shows the list of files that have been uploaded to the application and allows users to delete them.

|

||||

|

||||

## 3. Chat with your documents

|

||||

|

||||

|

||||

|

||||

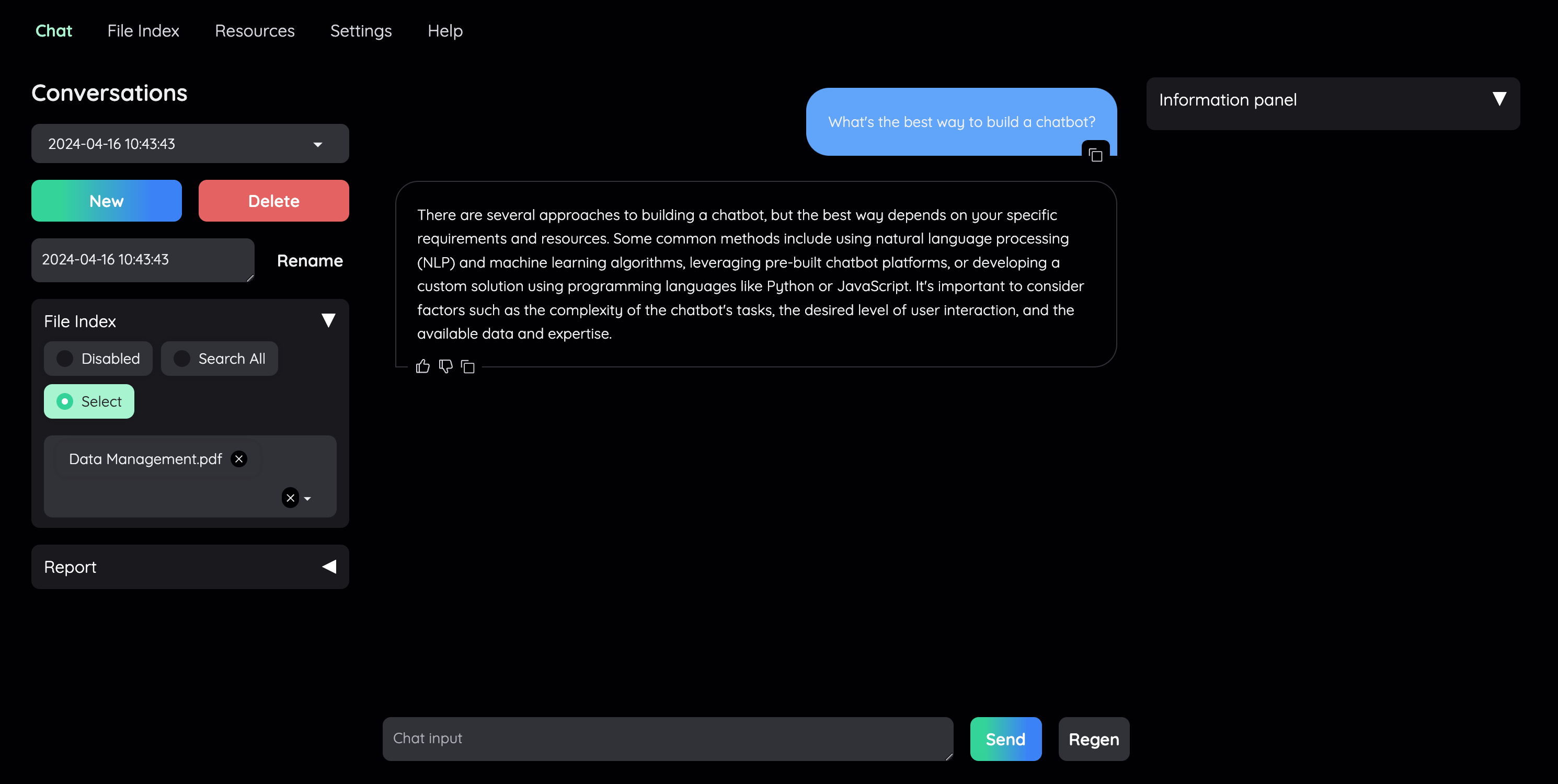

Now navigate back to the `Chat` tab. The chat tab is divided into 3 regions:

|

||||

|

||||

1. Conversation Settings Panel

|

||||

- Here you can select, create, rename, and delete conversations.

|

||||

- By default, a new conversation is created automatically if no conversation is selected.

|

||||

- Below that you have the file index, where you can select which files to retrieve references from.

|

||||

- These are the files you have uploaded to the application from the `File Index` tab.

|

||||

- If no file is selected, all files will be used.

|

||||

2. Chat Panel

|

||||

- This is where you can chat with the chatbot.

|

||||

3. Information panel

|

||||

- Supporting information such as the retrieved evidence and reference will be

|

||||

displayed here.

@@ -10,8 +10,8 @@ class ChatPanel(BasePage):
    def on_building_ui(self):
        self.chatbot = gr.Chatbot(
            label="Kotaemon",
            # placeholder="This is the beginning of a new conversation.",
            show_label=True,
            placeholder="This is the beginning of a new conversation.",
            show_label=False,
            elem_id="main-chat-bot",
            show_copy_button=True,
            likeable=True,

@@ -6,9 +6,13 @@ import gradio as gr
class HelpPage:
    def __init__(self, app):
        self._app = app
        self.dir_md = Path(__file__).parent.parent / "assets" / "md"
        self.md_dir = Path(__file__).parent.parent / "assets" / "md"
        self.doc_dir = Path(__file__).parents[4] / "docs"

        with gr.Accordion("About"):
            with (self.md_dir / "about.md").open(encoding="utf-8") as fi:
                gr.Markdown(fi.read())

        with gr.Accordion("User Guide"):
            with (self.doc_dir / "usage.md").open(encoding="utf-8") as fi:
                gr.Markdown(fi.read())

@@ -17,5 +21,5 @@ class HelpPage:
            gr.Markdown(self.get_changelogs())

    def get_changelogs(self):
        with (self.dir_md / "changelogs.md").open(encoding="utf-8") as fi:
        with (self.md_dir / "changelogs.md").open(encoding="utf-8") as fi:
            return fi.read()
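`parents[4]` counts directories upward from the module file. Since `Path(__file__).parent.parent / "assets" / "md"` resolves to `libs/ktem/ktem/assets/md` (where this commit places about.md), the help module sits two levels below `libs/ktem/ktem`, so four levels up is the repository root and `doc_dir` points at its `docs` folder. The sketch uses `PurePosixPath` so nothing touches the filesystem; the `/repo` prefix and the `pages/help.py` filename are placeholders, not taken from the diff:

```python
from pathlib import PurePosixPath

# Placeholder location for the help page module (hypothetical path)
module = PurePosixPath("/repo/libs/ktem/ktem/pages/help.py")

md_dir = module.parent.parent / "assets" / "md"  # same expression as md_dir above
doc_dir = module.parents[4] / "docs"  # same expression as doc_dir above

# parents[0] is the module's own folder; each index climbs one more level
for i, parent in enumerate(module.parents):
    print(i, parent)
print("md_dir:", md_dir)
print("doc_dir:", doc_dir)
```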
