feat: sso login, demo mode & new mindmap support (#644) bump:minor

* fix: update .env.example
* feat: add SSO login
* fix: update flowsetting
* fix: add requirement
* fix: refine UI
* fix: update group id-based operation
* fix: improve citation logics
* fix: UI enhancement
* fix: user_id to string in models
* fix: improve chat suggestion UI and flow
* fix: improve group id handling
* fix: improve chat suggestion
* fix: secure download for single file
* fix: file limiting in docstore
* fix: improve chat suggestion logics & language conform
* feat: add markmap and select text to highlight function
* fix: update Dockerfile
* fix: user id auto generate
* fix: default user id
* feat: add demo mode
* fix: update flowsetting
* fix: revise default params for demo
* feat: sso_app alternative
* feat: sso login demo
* feat: demo specific customization
* feat: add login using API key
* fix: disable key-based login
* fix: optimize duplicate upload
* fix: gradio routing
* fix: disable arm build for demo
* fix: revise full-text search js logic
* feat: add rate limit
* fix: update Dockerfile with new launch script
* fix: update Dockerfile
* fix: update Dockerignore
* fix: update ratelimit logic
* fix: user_id in user management page
* fix: rename conv logic
* feat: update demo hint
* fix: minor fix
* fix: highlight on long PDF load
* feat: add HF paper list
* fix: update HF papers load logic
* feat: fly config
* fix: update fly config
* fix: update paper list pull api
* fix: minor update root routing
* fix: minor update root routing
* fix: simplify login flow & paper list UI
* feat: add paper recommendation
* fix: update Dockerfile
* fix: update Dockerfile
* fix: update default model
* feat: add long context Ollama through LCOllama
* feat: expose Gradio share to env
* fix: revert customized changes
* fix: list group at app load
* fix: relocate share conv button
* fix: update launch script
* fix: update Docker CI
* feat: add Ollama model selection at first setup
* docs: update README

Committed by: GitHub
Parent: 3006402d7e
Commit: 3bd3830b8d

@@ -11,3 +11,5 @@ env/
 README.md
 *.zip
 *.sh
+
+!/launch.sh

@@ -3,8 +3,8 @@
 # settings for OpenAI
 OPENAI_API_BASE=https://api.openai.com/v1
 OPENAI_API_KEY=<YOUR_OPENAI_KEY>
-OPENAI_CHAT_MODEL=gpt-3.5-turbo
-OPENAI_EMBEDDINGS_MODEL=text-embedding-ada-002
+OPENAI_CHAT_MODEL=gpt-4o-mini
+OPENAI_EMBEDDINGS_MODEL=text-embedding-3-large
 
 # settings for Azure OpenAI
 AZURE_OPENAI_ENDPOINT=

@@ -17,10 +17,8 @@ AZURE_OPENAI_EMBEDDINGS_DEPLOYMENT=text-embedding-ada-002
 COHERE_API_KEY=<COHERE_API_KEY>
 
 # settings for local models
-LOCAL_MODEL=llama3.1:8b
+LOCAL_MODEL=qwen2.5:7b
 LOCAL_MODEL_EMBEDDINGS=nomic-embed-text
-LOCAL_EMBEDDING_MODEL_DIM = 768
-LOCAL_EMBEDDING_MODEL_MAX_TOKENS = 8192
 
 # settings for GraphRAG
 GRAPHRAG_API_KEY=<YOUR_OPENAI_KEY>

.github/workflows/build-push-docker.yaml (vendored, 1 line changed)

@@ -28,6 +28,7 @@ jobs:
         target:
           - lite
           - full
+          - ollama
     steps:
       - name: Free Disk Space (Ubuntu)
         uses: jlumbroso/free-disk-space@main

.github/workflows/fly-deploy.yml (vendored, normal file, 18 lines changed)

@@ -0,0 +1,18 @@
+# See https://fly.io/docs/app-guides/continuous-deployment-with-github-actions/
+
+name: Fly Deploy
+on:
+  push:
+    branches:
+      - main
+jobs:
+  deploy:
+    name: Deploy app
+    runs-on: ubuntu-latest
+    concurrency: deploy-group # optional: ensure only one action runs at a time
+    steps:
+      - uses: actions/checkout@v4
+      - uses: superfly/flyctl-actions/setup-flyctl@master
+      - run: flyctl deploy --remote-only
+        env:
+          FLY_API_TOKEN: ${{ secrets.FLY_API_TOKEN }}

@@ -57,6 +57,7 @@ repos:
             "types-requests",
             "sqlmodel",
             "types-Markdown",
+            "types-cachetools",
             types-tzlocal,
           ]
         args: ["--check-untyped-defs", "--ignore-missing-imports"]

Dockerfile (19 lines changed)

@@ -35,6 +35,7 @@ RUN bash scripts/download_pdfjs.sh $PDFJS_PREBUILT_DIR
 
 # Copy contents
 COPY . /app
+COPY launch.sh /app/launch.sh
 COPY .env.example /app/.env
 
 # Install pip packages

@@ -54,7 +55,7 @@ RUN apt-get autoremove \
     && rm -rf /var/lib/apt/lists/* \
     && rm -rf ~/.cache
 
-CMD ["python", "app.py"]
+ENTRYPOINT ["sh", "/app/launch.sh"]
 
 # Full version
 FROM lite AS full

@@ -97,7 +98,17 @@ RUN apt-get autoremove \
     && rm -rf /var/lib/apt/lists/* \
     && rm -rf ~/.cache
 
-# Download nltk packages as required for unstructured
-# RUN python -c "from unstructured.nlp.tokenize import _download_nltk_packages_if_not_present; _download_nltk_packages_if_not_present()"
+ENTRYPOINT ["sh", "/app/launch.sh"]
 
-CMD ["python", "app.py"]
+# Ollama-bundled version
+FROM full AS ollama
+
+# Install ollama
+RUN --mount=type=ssh \
+    --mount=type=cache,target=/root/.cache/pip \
+    curl -fsSL https://ollama.com/install.sh | sh
+
+# RUN nohup bash -c "ollama serve &" && sleep 4 && ollama pull qwen2.5:7b
+RUN nohup bash -c "ollama serve &" && sleep 4 && ollama pull nomic-embed-text
+
+ENTRYPOINT ["sh", "/app/launch.sh"]

README.md (22 lines changed)

@@ -96,18 +96,7 @@ documents and developers who want to build their own RAG pipeline.
 
 ### With Docker (recommended)
 
-1. We support both `lite` & `full` version of Docker images. With `full`, the extra packages of `unstructured` will be installed as well, it can support additional file types (`.doc`, `.docx`, ...) but the cost is larger docker image size. For most users, the `lite` image should work well in most cases.
+1. We support both `lite` & `full` version of Docker images. With `full` version, the extra packages of `unstructured` will be installed, which can support additional file types (`.doc`, `.docx`, ...) but the cost is larger docker image size. For most users, the `lite` image should work well in most cases.
 
-   - To use the `lite` version.
-
-     ```bash
-     docker run \
-     -e GRADIO_SERVER_NAME=0.0.0.0 \
-     -e GRADIO_SERVER_PORT=7860 \
-     -v ./ktem_app_data:/app/ktem_app_data \
-     -p 7860:7860 -it --rm \
-     ghcr.io/cinnamon/kotaemon:main-lite
-     ```
-
    - To use the `full` version.
 

@@ -124,7 +113,14 @@ documents and developers who want to build their own RAG pipeline.
 
      ```bash
      # change image name to
-     ghcr.io/cinnamon/kotaemon:feat-ollama_docker-full
+     docker run <...> ghcr.io/cinnamon/kotaemon:main-ollama
+     ```
+
+   - To use the `lite` version.
+
+     ```bash
+     # change image name to
+     docker run <...> ghcr.io/cinnamon/kotaemon:main-lite
      ```
 
 2. We currently support and test two platforms: `linux/amd64` and `linux/arm64` (for newer Mac). You can specify the platform by passing `--platform` in the `docker run` command. For example:

app.py (2 lines changed)

@@ -3,6 +3,7 @@ import os
 from theflow.settings import settings as flowsettings
 
 KH_APP_DATA_DIR = getattr(flowsettings, "KH_APP_DATA_DIR", ".")
+KH_GRADIO_SHARE = getattr(flowsettings, "KH_GRADIO_SHARE", False)
 GRADIO_TEMP_DIR = os.getenv("GRADIO_TEMP_DIR", None)
 # override GRADIO_TEMP_DIR if it's not set
 if GRADIO_TEMP_DIR is None:

@@ -21,4 +22,5 @@ demo.queue().launch(
         "libs/ktem/ktem/assets",
         GRADIO_TEMP_DIR,
     ],
+    share=KH_GRADIO_SHARE,
 )

@@ -4,8 +4,8 @@ An open-source tool for chatting with your documents. Built with both end users
 developers in mind.
 
 [Source Code](https://github.com/Cinnamon/kotaemon) |
-[Live Demo](https://huggingface.co/spaces/cin-model/kotaemon-demo)
+[HF Space](https://huggingface.co/spaces/cin-model/kotaemon-demo)
 
-[User Guide](https://cinnamon.github.io/kotaemon/) |
+[Installation Guide](https://cinnamon.github.io/kotaemon/) |
 [Developer Guide](https://cinnamon.github.io/kotaemon/development/) |
 [Feedback](https://github.com/Cinnamon/kotaemon/issues)

@@ -1,7 +1,7 @@

|

|||||||

## Installation (Online HuggingFace Space)

|

## Installation (Online HuggingFace Space)

|

||||||

|

|

||||||

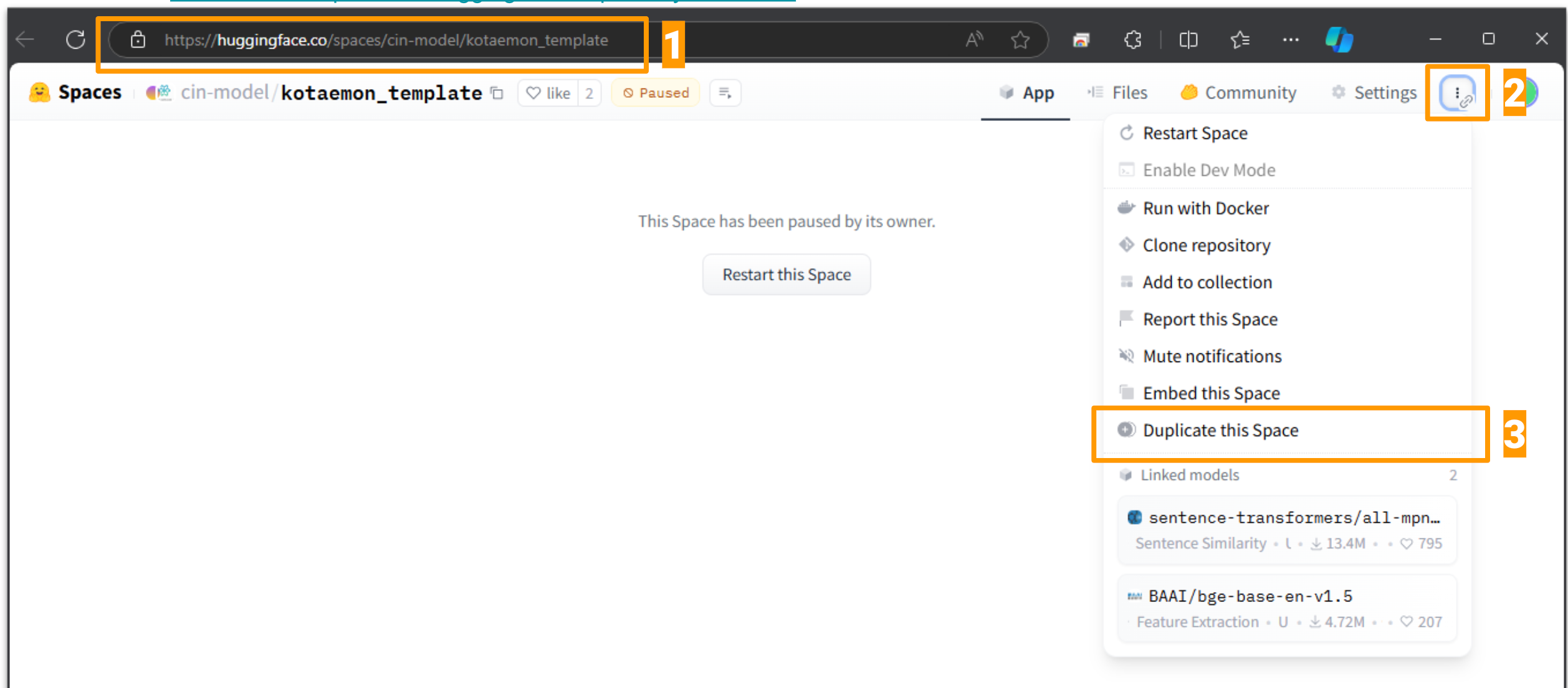

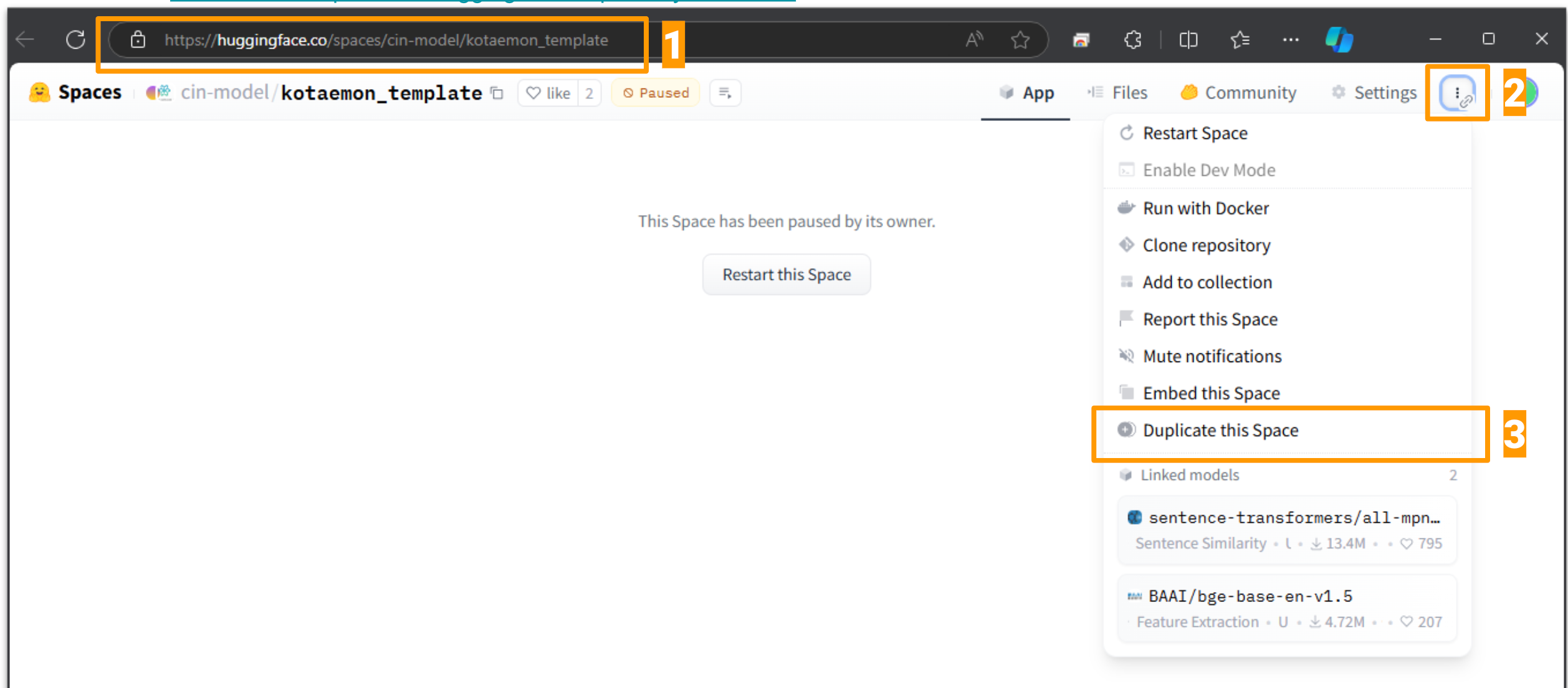

1. Go to [HF kotaemon_template](https://huggingface.co/spaces/cin-model/kotaemon_template).

|

1. Go to [HF kotaemon_template](https://huggingface.co/spaces/cin-model/kotaemon_template).

|

||||||

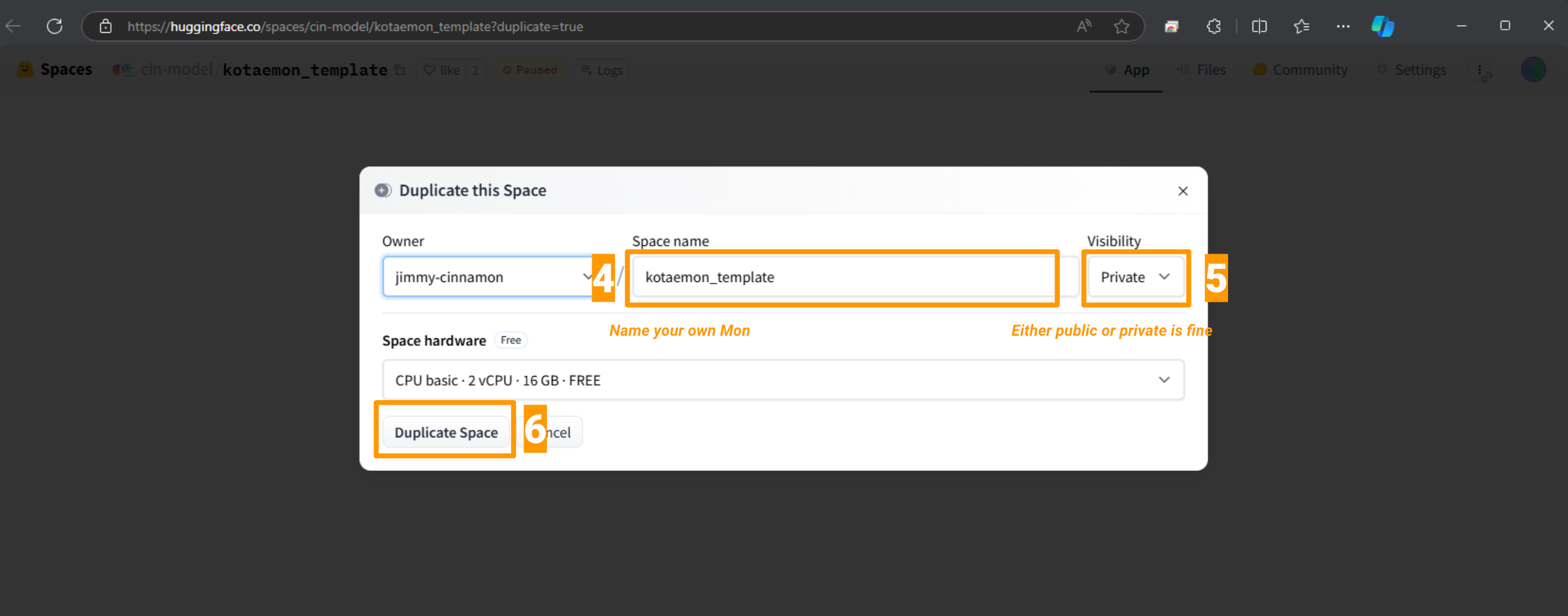

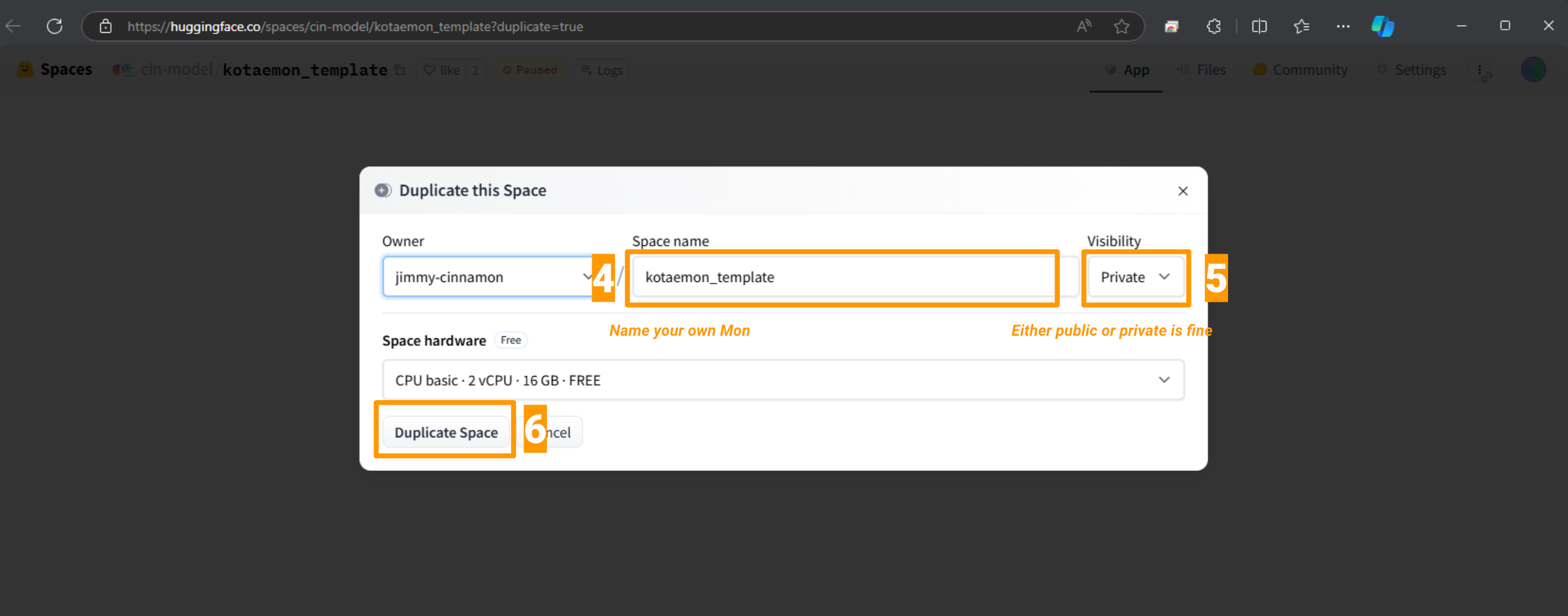

2. Use Duplicate function to create your own space.

|

2. Use Duplicate function to create your own space. Or use this [direct link](https://huggingface.co/spaces/cin-model/kotaemon_template?duplicate=true).

|

||||||

|

|

||||||

|

|

||||||

3. Wait for the build to complete and start up (apprx 10 mins).

|

3. Wait for the build to complete and start up (apprx 10 mins).

|

||||||

|

|||||||

@@ -25,7 +25,8 @@ if not KH_APP_VERSION:
     except Exception:
         KH_APP_VERSION = "local"
 
-KH_ENABLE_FIRST_SETUP = True
+KH_GRADIO_SHARE = config("KH_GRADIO_SHARE", default=False, cast=bool)
+KH_ENABLE_FIRST_SETUP = config("KH_ENABLE_FIRST_SETUP", default=True, cast=bool)
 KH_DEMO_MODE = config("KH_DEMO_MODE", default=False, cast=bool)
 KH_OLLAMA_URL = config("KH_OLLAMA_URL", default="http://localhost:11434/v1/")
 

@@ -65,6 +66,8 @@ os.environ["HF_HUB_CACHE"] = str(KH_APP_DATA_DIR / "huggingface")
 KH_DOC_DIR = this_dir / "docs"
 
 KH_MODE = "dev"
+KH_SSO_ENABLED = config("KH_SSO_ENABLED", default=False, cast=bool)
+
 KH_FEATURE_CHAT_SUGGESTION = config(
     "KH_FEATURE_CHAT_SUGGESTION", default=False, cast=bool
 )

@@ -137,31 +140,36 @@ if config("AZURE_OPENAI_API_KEY", default="") and config(
         "default": False,
     }
 
-if config("OPENAI_API_KEY", default=""):
+OPENAI_DEFAULT = "<YOUR_OPENAI_KEY>"
+OPENAI_API_KEY = config("OPENAI_API_KEY", default=OPENAI_DEFAULT)
+GOOGLE_API_KEY = config("GOOGLE_API_KEY", default="your-key")
+IS_OPENAI_DEFAULT = len(OPENAI_API_KEY) > 0 and OPENAI_API_KEY != OPENAI_DEFAULT
+
+if OPENAI_API_KEY:
     KH_LLMS["openai"] = {
         "spec": {
             "__type__": "kotaemon.llms.ChatOpenAI",
             "temperature": 0,
             "base_url": config("OPENAI_API_BASE", default="")
             or "https://api.openai.com/v1",
-            "api_key": config("OPENAI_API_KEY", default=""),
-            "model": config("OPENAI_CHAT_MODEL", default="gpt-3.5-turbo"),
+            "api_key": OPENAI_API_KEY,
+            "model": config("OPENAI_CHAT_MODEL", default="gpt-4o-mini"),
             "timeout": 20,
         },
-        "default": True,
+        "default": IS_OPENAI_DEFAULT,
     }
     KH_EMBEDDINGS["openai"] = {
         "spec": {
             "__type__": "kotaemon.embeddings.OpenAIEmbeddings",
             "base_url": config("OPENAI_API_BASE", default="https://api.openai.com/v1"),
-            "api_key": config("OPENAI_API_KEY", default=""),
+            "api_key": OPENAI_API_KEY,
             "model": config(
-                "OPENAI_EMBEDDINGS_MODEL", default="text-embedding-ada-002"
+                "OPENAI_EMBEDDINGS_MODEL", default="text-embedding-3-large"
             ),
             "timeout": 10,
             "context_length": 8191,
         },
-        "default": True,
+        "default": IS_OPENAI_DEFAULT,
     }
 
 if config("LOCAL_MODEL", default=""):

@@ -169,11 +177,21 @@ if config("LOCAL_MODEL", default=""):
         "spec": {
             "__type__": "kotaemon.llms.ChatOpenAI",
             "base_url": KH_OLLAMA_URL,
-            "model": config("LOCAL_MODEL", default="llama3.1:8b"),
+            "model": config("LOCAL_MODEL", default="qwen2.5:7b"),
             "api_key": "ollama",
         },
         "default": False,
     }
+    KH_LLMS["ollama-long-context"] = {
+        "spec": {
+            "__type__": "kotaemon.llms.LCOllamaChat",
+            "base_url": KH_OLLAMA_URL.replace("v1/", ""),
+            "model": config("LOCAL_MODEL", default="qwen2.5:7b"),
+            "num_ctx": 8192,
+        },
+        "default": False,
+    }
+
     KH_EMBEDDINGS["ollama"] = {
         "spec": {
             "__type__": "kotaemon.embeddings.OpenAIEmbeddings",
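The `ollama-long-context` entry in the hunk above derives the native Ollama base URL by stripping `v1/` from the OpenAI-compatible `KH_OLLAMA_URL`. The sketch below reproduces only that string operation in isolation; `native_ollama_url` is a hypothetical helper name used for illustration and is not part of the commit.

```python
def native_ollama_url(openai_compat_url: str) -> str:
    """Strip the OpenAI-compatible "v1/" segment, as the settings hunk does."""
    return openai_compat_url.replace("v1/", "")


# Default value of KH_OLLAMA_URL shown in the settings file.
print(native_ollama_url("http://localhost:11434/v1/"))

# A URL configured without the trailing slash comes back unchanged,
# because the substring "v1/" never occurs in it.
print(native_ollama_url("http://localhost:11434/v1"))
```

If this reading is right, a `KH_OLLAMA_URL` set without the trailing slash would keep its `/v1` suffix in the long-context entry, which may be worth checking when overriding the default.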

@@ -183,7 +201,6 @@ if config("LOCAL_MODEL", default=""):
         },
         "default": False,
     }
-
     KH_EMBEDDINGS["fast_embed"] = {
         "spec": {
             "__type__": "kotaemon.embeddings.FastEmbedEmbeddings",

@@ -205,9 +222,9 @@ KH_LLMS["google"] = {
     "spec": {
         "__type__": "kotaemon.llms.chats.LCGeminiChat",
         "model_name": "gemini-1.5-flash",
-        "api_key": config("GOOGLE_API_KEY", default="your-key"),
+        "api_key": GOOGLE_API_KEY,
     },
-    "default": False,
+    "default": not IS_OPENAI_DEFAULT,
 }
 KH_LLMS["groq"] = {
     "spec": {

@@ -241,8 +258,9 @@ KH_EMBEDDINGS["google"] = {
     "spec": {
         "__type__": "kotaemon.embeddings.LCGoogleEmbeddings",
         "model": "models/text-embedding-004",
-        "google_api_key": config("GOOGLE_API_KEY", default="your-key"),
-    }
+        "google_api_key": GOOGLE_API_KEY,
+    },
+    "default": not IS_OPENAI_DEFAULT,
 }
 # KH_EMBEDDINGS["huggingface"] = {
 #     "spec": {
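Read together, the `IS_OPENAI_DEFAULT` changes in this file appear to make OpenAI the default provider only when a real key is configured, and to fall back to Google otherwise. A minimal sketch of that rule follows; `is_real_key` and `pick_default_provider` are hypothetical names chosen for illustration and do not appear in the commit.

```python
OPENAI_PLACEHOLDER = "<YOUR_OPENAI_KEY>"  # placeholder value used as the config default


def is_real_key(api_key: str, placeholder: str = OPENAI_PLACEHOLDER) -> bool:
    """Same expression as IS_OPENAI_DEFAULT: non-empty and not the placeholder."""
    return len(api_key) > 0 and api_key != placeholder


def pick_default_provider(openai_api_key: str) -> str:
    """Hypothetical helper: which provider would carry "default": True."""
    return "openai" if is_real_key(openai_api_key) else "google"


print(pick_default_provider("sk-example"))         # a real-looking key
print(pick_default_provider(OPENAI_PLACEHOLDER))   # key left at the placeholder
```

One consequence that may be worth verifying: because `OPENAI_API_KEY` now defaults to the placeholder string, `if OPENAI_API_KEY:` is truthy even when no key is set, so the OpenAI entries would still be registered, just not as the default.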

@@ -301,9 +319,12 @@ SETTINGS_REASONING = {
 
 USE_NANO_GRAPHRAG = config("USE_NANO_GRAPHRAG", default=False, cast=bool)
 USE_LIGHTRAG = config("USE_LIGHTRAG", default=True, cast=bool)
+USE_MS_GRAPHRAG = config("USE_MS_GRAPHRAG", default=True, cast=bool)
 
-GRAPHRAG_INDEX_TYPES = ["ktem.index.file.graph.GraphRAGIndex"]
+GRAPHRAG_INDEX_TYPES = []
 
+if USE_MS_GRAPHRAG:
+    GRAPHRAG_INDEX_TYPES.append("ktem.index.file.graph.GraphRAGIndex")
 if USE_NANO_GRAPHRAG:
     GRAPHRAG_INDEX_TYPES.append("ktem.index.file.graph.NanoGraphRAGIndex")
 if USE_LIGHTRAG:
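The hunk above turns the MS GraphRAG index from an unconditional entry into one gated by `USE_MS_GRAPHRAG`. A small sketch of the resulting list construction, under the assumption that each flag simply appends its class path in order; `graphrag_index_types` is a hypothetical function name, and the LightRAG branch is left out because its class path is not shown in this hunk.

```python
def graphrag_index_types(use_ms: bool, use_nano: bool) -> list[str]:
    """Build the index-type list from feature flags, in the order the hunk uses."""
    types: list[str] = []
    if use_ms:
        types.append("ktem.index.file.graph.GraphRAGIndex")
    if use_nano:
        types.append("ktem.index.file.graph.NanoGraphRAGIndex")
    return types


# With both flags off the list is empty, which was not possible before this
# change because GraphRAGIndex was always present.
print(graphrag_index_types(use_ms=False, use_nano=False))
print(graphrag_index_types(use_ms=True, use_nano=True))
```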

@@ -323,7 +344,7 @@ GRAPHRAG_INDICES = [
                 ".png, .jpeg, .jpg, .tiff, .tif, .pdf, .xls, .xlsx, .doc, .docx, "
                 ".pptx, .csv, .html, .mhtml, .txt, .md, .zip"
             ),
-            "private": False,
+            "private": True,
         },
         "index_type": graph_type,
     }

@@ -338,7 +359,7 @@ KH_INDICES = [
                 ".png, .jpeg, .jpg, .tiff, .tif, .pdf, .xls, .xlsx, .doc, .docx, "
                 ".pptx, .csv, .html, .mhtml, .txt, .md, .zip"
             ),
-            "private": False,
+            "private": True,
         },
         "index_type": "ktem.index.file.FileIndex",
     },

fly.toml (normal file, 26 lines changed)

@@ -0,0 +1,26 @@
+# fly.toml app configuration file generated for kotaemon on 2024-12-24T20:56:32+07:00
+#
+# See https://fly.io/docs/reference/configuration/ for information about how to use this file.
+#
+
+app = 'kotaemon'
+primary_region = 'sin'
+
+[build]
+
+[mounts]
+  destination = "/app/ktem_app_data"
+  source = "ktem_volume"
+
+[http_service]
+  internal_port = 7860
+  force_https = true
+  auto_stop_machines = 'suspend'
+  auto_start_machines = true
+  min_machines_running = 0
+  processes = ['app']
+
+[[vm]]
+  memory = '4gb'
+  cpu_kind = 'shared'
+  cpus = 4

launch.sh (executable file, 23 lines changed)

@@ -0,0 +1,23 @@
+#!/bin/bash
+
+if [ -z "$GRADIO_SERVER_NAME" ]; then
+    export GRADIO_SERVER_NAME="0.0.0.0"
+fi
+if [ -z "$GRADIO_SERVER_PORT" ]; then
+    export GRADIO_SERVER_PORT="7860"
+fi
+
+# Check if environment variable KH_DEMO_MODE is set to true
+if [ "$KH_DEMO_MODE" = "true" ]; then
+    echo "KH_DEMO_MODE is true. Launching in demo mode..."
+    # Command to launch in demo mode
+    GR_FILE_ROOT_PATH="/app" KH_FEATURE_USER_MANAGEMENT=false USE_LIGHTRAG=false uvicorn sso_app_demo:app --host "$GRADIO_SERVER_NAME" --port "$GRADIO_SERVER_PORT"
+else
+    if [ "$KH_SSO_ENABLED" = "true" ]; then
+        echo "KH_SSO_ENABLED is true. Launching in SSO mode..."
+        GR_FILE_ROOT_PATH="/app" KH_SSO_ENABLED=true uvicorn sso_app:app --host "$GRADIO_SERVER_NAME" --port "$GRADIO_SERVER_PORT"
+    else
+        ollama serve &
+        python app.py
+    fi
+fi
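The branch order in `launch.sh` (demo mode first, then SSO, then the plain Gradio app with a background `ollama serve`) can be restated as a small decision function. This is only an illustrative sketch in Python; `select_launch_mode` and the mode labels are hypothetical names, not something the commit defines.

```python
def select_launch_mode(env: dict[str, str]) -> str:
    """Return "demo", "sso", or "default", using the same precedence as launch.sh."""
    # The script compares against the literal string "true", so do the same here.
    if env.get("KH_DEMO_MODE") == "true":
        return "demo"
    if env.get("KH_SSO_ENABLED") == "true":
        return "sso"
    return "default"


print(select_launch_mode({"KH_DEMO_MODE": "true", "KH_SSO_ENABLED": "true"}))
print(select_launch_mode({"KH_SSO_ENABLED": "true"}))
print(select_launch_mode({"KH_DEMO_MODE": "True"}))
```

Note the exact-match comparison: a value such as `True` or `1` falls through to the default branch in the script, even though the `cast=bool` settings in the Python config would, as far as I can tell, accept those spellings. Setting the variables to lowercase `true` avoids the mismatch.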

@@ -3,6 +3,7 @@ from collections import defaultdict
 from typing import Generator
 
 import numpy as np
+from decouple import config
 from theflow.settings import settings as flowsettings
 
 from kotaemon.base import (

@@ -32,7 +33,9 @@ except ImportError:
 
 MAX_IMAGES = 10
 CITATION_TIMEOUT = 5.0
-CONTEXT_RELEVANT_WARNING_SCORE = 0.7
+CONTEXT_RELEVANT_WARNING_SCORE = config(
+    "CONTEXT_RELEVANT_WARNING_SCORE", 0.3, cast=float
+)
 
 DEFAULT_QA_TEXT_PROMPT = (
     "Use the following pieces of context to answer the question at the end in detail with clear explanation. " # noqa: E501

@@ -385,7 +388,9 @@ class AnswerWithContextPipeline(BaseComponent):
                 doc = id2docs[id_]
                 doc_score = doc.metadata.get("llm_trulens_score", 0.0)
                 is_open = not has_llm_score or (
-                    doc_score > CONTEXT_RELEVANT_WARNING_SCORE and len(with_citation) == 0
+                    doc_score
+                    > CONTEXT_RELEVANT_WARNING_SCORE
+                    # and len(with_citation) == 0
                 )
                 without_citation.append(
                     Document(
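After this hunk, whether an uncited document is rendered expanded seems to depend only on its relevance score, since the `len(with_citation) == 0` condition is commented out and the threshold default drops from 0.7 to 0.3. The sketch below isolates that predicate; the standalone `is_open` function and its parameter names are assumptions made for illustration.

```python
def is_open(has_llm_score: bool, doc_score: float, threshold: float = 0.3) -> bool:
    """Expanded if there is no LLM score at all, or the score strictly exceeds the threshold."""
    return not has_llm_score or doc_score > threshold


print(is_open(has_llm_score=False, doc_score=0.0))  # no score available
print(is_open(has_llm_score=True, doc_score=0.5))   # above the new default
print(is_open(has_llm_score=True, doc_score=0.3))   # equal to the threshold
```

The comparison is strict, so a document scoring exactly at the threshold stays collapsed.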

@@ -2,6 +2,8 @@ from difflib import SequenceMatcher
 
 
 def find_text(search_span, context, min_length=5):
+    search_span, context = search_span.lower(), context.lower()
+
     sentence_list = search_span.split("\n")
     context = context.replace("\n", " ")
 

@@ -18,7 +20,7 @@ def find_text(search_span, context, min_length=5):
 
         matched_blocks = []
         for _, start, length in match_results:
-            if length > max(len(sentence) * 0.2, min_length):
+            if length > max(len(sentence) * 0.25, min_length):
                 matched_blocks.append((start, start + length))
 
         if matched_blocks:

@@ -42,6 +44,9 @@ def find_text(search_span, context, min_length=5):
 def find_start_end_phrase(
     start_phrase, end_phrase, context, min_length=5, max_excerpt_length=300
 ):
+    start_phrase, end_phrase = start_phrase.lower(), end_phrase.lower()
+    context = context.lower()
+
     context = context.replace("\n", " ")
 
     matches = []
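The three hunks above lower-case both inputs before matching and raise the minimum block length from 20% to 25% of the sentence. A self-contained sketch of that matching step is shown below. It assumes the matcher is `difflib.SequenceMatcher` called with the sentence as the first sequence and the context as the second (the diff only shows the import and the loop over `match_results`); `matched_spans` is a hypothetical name.

```python
from difflib import SequenceMatcher


def matched_spans(sentence: str, context: str, min_length: int = 5) -> list[tuple[int, int]]:
    """Return (start, end) offsets in the context for sufficiently long matching blocks."""
    # Case-insensitive comparison, as introduced by the commit.
    sentence, context = sentence.lower(), context.lower()
    context = context.replace("\n", " ")

    blocks = SequenceMatcher(None, sentence, context, autojunk=False).get_matching_blocks()

    # Keep only blocks longer than 25% of the sentence (and longer than min_length).
    threshold = max(len(sentence) * 0.25, min_length)
    return [(start, start + length) for _, start, length in blocks if length > threshold]


spans = matched_spans("quick brown fox", "The Quick Brown Fox jumps over the lazy dog")
print(spans)
```

Without the lower-casing, the capitalised words in the context would break the match into short fragments that fall below the length threshold.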

@@ -177,7 +177,11 @@ class VectorRetrieval(BaseRetrieval):
             ]
         elif self.retrieval_mode == "text":
             query = text.text if isinstance(text, Document) else text
-            docs = self.doc_store.query(query, top_k=top_k_first_round, doc_ids=scope)
+            docs = []
+            if scope:
+                docs = self.doc_store.query(
+                    query, top_k=top_k_first_round, doc_ids=scope
+                )
             result = [RetrievedDocument(**doc.to_dict(), score=-1.0) for doc in docs]
         elif self.retrieval_mode == "hybrid":
             # similarity search section

@@ -206,6 +210,7 @@ class VectorRetrieval(BaseRetrieval):
 
                 assert self.doc_store is not None
                 query = text.text if isinstance(text, Document) else text
+                if scope:
                     ds_docs = self.doc_store.query(
                         query, top_k=top_k_first_round, doc_ids=scope
                     )
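Both retrieval hunks add the same guard: the document store is queried only when `scope` is non-empty, so an empty or missing scope yields no full-text results instead of an unscoped search. The sketch below demonstrates the guard with a hypothetical in-memory stand-in; `FakeDocStore` and `text_search` are illustrative names and not the project's classes.

```python
class FakeDocStore:
    """Hypothetical stand-in that records how often query() is called."""

    def __init__(self) -> None:
        self.calls = 0

    def query(self, query: str, top_k: int, doc_ids: list[str]) -> list[str]:
        self.calls += 1
        return [f"{doc_id}:{query}" for doc_id in doc_ids][:top_k]


def text_search(doc_store: FakeDocStore, query: str, scope, top_k: int = 10) -> list[str]:
    """Query the store only when a non-empty scope is given."""
    docs: list[str] = []
    if scope:
        docs = doc_store.query(query, top_k=top_k, doc_ids=scope)
    return docs


store = FakeDocStore()
print(text_search(store, "llm", []), store.calls)       # store is never touched
print(text_search(store, "llm", ["doc-1"]), store.calls)
```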

@@ -12,6 +12,7 @@ from .chats import (
     LCChatOpenAI,
     LCCohereChat,
     LCGeminiChat,
+    LCOllamaChat,
     LlamaCppChat,
 )
 from .completions import LLM, AzureOpenAI, LlamaCpp, OpenAI

@@ -33,6 +34,7 @@ __all__ = [
     "LCAnthropicChat",
     "LCGeminiChat",
     "LCCohereChat",
+    "LCOllamaChat",
     "LCAzureChatOpenAI",
     "LCChatOpenAI",
     "LlamaCppChat",

@@ -7,6 +7,7 @@ from .langchain_based import (
     LCChatOpenAI,
     LCCohereChat,
     LCGeminiChat,
+    LCOllamaChat,
 )
 from .llamacpp import LlamaCppChat
 from .openai import AzureChatOpenAI, ChatOpenAI

@@ -20,6 +21,7 @@ __all__ = [
     "LCAnthropicChat",
     "LCGeminiChat",
     "LCCohereChat",
+    "LCOllamaChat",
     "LCChatOpenAI",
     "LCAzureChatOpenAI",
     "LCChatMixin",

@@ -358,3 +358,40 @@ class LCCohereChat(LCChatMixin, ChatLLM): # type: ignore
             raise ImportError("Please install langchain-cohere")
 
         return ChatCohere
+
+
+class LCOllamaChat(LCChatMixin, ChatLLM): # type: ignore
+    base_url: str = Param(
+        help="Base Ollama URL. (default: http://localhost:11434/api/)", # noqa
+        required=True,
+    )
+    model: str = Param(
+        help="Model name to use (https://ollama.com/library)",
+        required=True,
+    )
+    num_ctx: int = Param(
+        help="The size of the context window (default: 8192)",
+        required=True,
+    )
+
+    def __init__(
+        self,
+        model: str | None = None,
+        base_url: str | None = None,
+        num_ctx: int | None = None,
+        **params,
+    ):
+        super().__init__(
+            base_url=base_url,
+            model=model,
+            num_ctx=num_ctx,
+            **params,
+        )
+
+    def _get_lc_class(self):
+        try:
+            from langchain_ollama import ChatOllama
+        except ImportError:
+            raise ImportError("Please install langchain-ollama")
+
+        return ChatOllama

@@ -3,15 +3,18 @@ from io import BytesIO

|

|||||||

from pathlib import Path

|

from pathlib import Path

|

||||||

from typing import Dict, List, Optional

|

from typing import Dict, List, Optional

|

||||||

|

|

||||||

|

from decouple import config

|

||||||

from fsspec import AbstractFileSystem

|

from fsspec import AbstractFileSystem

|

||||||

from llama_index.readers.file import PDFReader

|

from llama_index.readers.file import PDFReader

|

||||||

from PIL import Image

|

from PIL import Image

|

||||||

|

|

||||||

from kotaemon.base import Document

|

from kotaemon.base import Document

|

||||||

|

|

||||||

|

PDF_LOADER_DPI = config("PDF_LOADER_DPI", default=40, cast=int)

|

||||||

|

|

||||||

|

|

||||||

def get_page_thumbnails(

|

def get_page_thumbnails(

|

||||||

file_path: Path, pages: list[int], dpi: int = 80

|

file_path: Path, pages: list[int], dpi: int = PDF_LOADER_DPI

|

||||||

) -> List[Image.Image]:

|

) -> List[Image.Image]:

|

||||||

"""Get image thumbnails of the pages in the PDF file.

|

"""Get image thumbnails of the pages in the PDF file.

|

||||||

|

|

||||||
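In the hunk above, `PDF_LOADER_DPI` is read from configuration once, at import time, and then used as the default value of `dpi`. Python evaluates default argument values when the function is defined, so changing the environment variable afterwards does not change that default. A small sketch of this behaviour, assuming plain `os.environ` in place of `decouple.config`; `thumbnail_dpi` is a hypothetical stand-in for `get_page_thumbnails`:

```python
import os

# Stand-in for: config("PDF_LOADER_DPI", default=40, cast=int)
PDF_LOADER_DPI = int(os.environ.get("PDF_LOADER_DPI", "40"))


def thumbnail_dpi(dpi: int = PDF_LOADER_DPI) -> int:
    """Hypothetical stand-in that only reports the DPI it would render at."""
    return dpi


default_at_import = thumbnail_dpi()

# Changing the environment after import has no effect on the bound default.
os.environ["PDF_LOADER_DPI"] = str(default_at_import + 100)
assert thumbnail_dpi() == default_at_import

# An explicit argument still overrides the default per call.
assert thumbnail_dpi(dpi=80) == 80
```

In practice this means the variable has to be set before the module is imported, for example in `.env` or in the launch script.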

|

|||||||

@@ -35,6 +35,7 @@ dependencies = [

|

|||||||

"langchain-openai>=0.1.4,<0.2.0",

|

"langchain-openai>=0.1.4,<0.2.0",

|

||||||

"langchain-google-genai>=1.0.3,<2.0.0",

|

"langchain-google-genai>=1.0.3,<2.0.0",

|

||||||

"langchain-anthropic",

|

"langchain-anthropic",

|

||||||

|

"langchain-ollama",

|

||||||

"langchain-cohere>=0.2.4,<0.3.0",

|

"langchain-cohere>=0.2.4,<0.3.0",

|

||||||

"llama-hub>=0.0.79,<0.1.0",

|

"llama-hub>=0.0.79,<0.1.0",

|

||||||

"llama-index>=0.10.40,<0.11.0",

|

"llama-index>=0.10.40,<0.11.0",

|

||||||

|

|||||||

@@ -13,7 +13,7 @@ from ktem.settings import BaseSettingGroup, SettingGroup, SettingReasoningGroup

|

|||||||

from theflow.settings import settings

|

from theflow.settings import settings

|

||||||

from theflow.utils.modules import import_dotted_string

|

from theflow.utils.modules import import_dotted_string

|

||||||

|

|

||||||

BASE_PATH = os.environ.get("GRADIO_ROOT_PATH", "")

|

BASE_PATH = os.environ.get("GR_FILE_ROOT_PATH", "")

|

||||||

|

|

||||||

|

|

||||||

class BaseApp:

|

class BaseApp:

|

||||||

@@ -57,7 +57,7 @@ class BaseApp:

|

|||||||

self._pdf_view_js = self._pdf_view_js.replace(

|

self._pdf_view_js = self._pdf_view_js.replace(

|

||||||

"PDFJS_PREBUILT_DIR",

|

"PDFJS_PREBUILT_DIR",

|

||||||

pdf_js_dist_dir,

|

pdf_js_dist_dir,

|

||||||

).replace("GRADIO_ROOT_PATH", BASE_PATH)

|

).replace("GR_FILE_ROOT_PATH", BASE_PATH)

|

||||||

with (dir_assets / "js" / "svg-pan-zoom.min.js").open() as fi:

|

with (dir_assets / "js" / "svg-pan-zoom.min.js").open() as fi:

|

||||||

self._svg_js = fi.read()

|

self._svg_js = fi.read()

|

||||||

|

|

||||||

@@ -79,7 +79,7 @@ class BaseApp:

|

|||||||

self.default_settings.index.finalize()

|

self.default_settings.index.finalize()

|

||||||

self.settings_state = gr.State(self.default_settings.flatten())

|

self.settings_state = gr.State(self.default_settings.flatten())

|

||||||

|

|

||||||

self.user_id = gr.State(1 if not self.f_user_management else None)

|

self.user_id = gr.State("default" if not self.f_user_management else None)

|

||||||

|

|

||||||

def initialize_indices(self):

|

def initialize_indices(self):

|

||||||

"""Create the index manager, start indices, and register to app settings"""

|

"""Create the index manager, start indices, and register to app settings"""

|

||||||

@@ -173,15 +173,25 @@ class BaseApp:

|

|||||||

"""Called when the app is created"""

|

"""Called when the app is created"""

|

||||||

|

|

||||||

def make(self):

|

def make(self):

|

||||||

|

markmap_js = """

|

||||||

|

<script>

|

||||||

|

window.markmap = {

|

||||||

|

/** @type AutoLoaderOptions */

|

||||||

|

autoLoader: {

|

||||||

|

toolbar: true, // Enable toolbar

|

||||||

|

},

|

||||||

|

};

|

||||||

|

</script>

|

||||||

|

"""

|

||||||

external_js = (

|

external_js = (

|

||||||

"<script type='module' "

|

"<script type='module' "

|

||||||

"src='https://cdn.skypack.dev/pdfjs-viewer-element'>"

|

"src='https://cdn.skypack.dev/pdfjs-viewer-element'>"

|

||||||

"</script>"

|

"</script>"

|

||||||

"<script>"

|

|

||||||

f"{self._svg_js}"

|

|

||||||

"</script>"

|

|

||||||

"<script type='module' "

|

"<script type='module' "

|

||||||

"src='https://cdnjs.cloudflare.com/ajax/libs/tributejs/5.1.3/tribute.min.js'>" # noqa

|

"src='https://cdnjs.cloudflare.com/ajax/libs/tributejs/5.1.3/tribute.min.js'>" # noqa

|

||||||

|

f"{markmap_js}"

|

||||||

|

"<script src='https://cdn.jsdelivr.net/npm/markmap-autoloader@0.16'></script>" # noqa

|

||||||

|

"<script src='https://cdn.jsdelivr.net/npm/minisearch@7.1.1/dist/umd/index.min.js'></script>" # noqa

|

||||||

"</script>"

|

"</script>"

|

||||||

"<link rel='stylesheet' href='https://cdnjs.cloudflare.com/ajax/libs/tributejs/5.1.3/tribute.css'/>" # noqa

|

"<link rel='stylesheet' href='https://cdnjs.cloudflare.com/ajax/libs/tributejs/5.1.3/tribute.css'/>" # noqa

|

||||||

)

|

)

|

||||||

|

|||||||

@@ -326,7 +326,12 @@ pdfjs-viewer-element {

|

|||||||

|

|

||||||

/* Switch checkbox styles */

|

/* Switch checkbox styles */

|

||||||

|

|

||||||

#is-public-checkbox {

|

/* #is-public-checkbox {

|

||||||

|

position: relative;

|

||||||

|

top: 4px;

|

||||||

|

} */

|

||||||

|

|

||||||

|

#suggest-chat-checkbox {

|

||||||

position: relative;

|

position: relative;

|

||||||

top: 4px;

|

top: 4px;

|

||||||

}

|

}

|

||||||

@@ -411,3 +416,43 @@ details.evidence {

|

|||||||

tbody:not(.row_odd) {

|

tbody:not(.row_odd) {

|

||||||

background: var(--table-even-background-fill);

|

background: var(--table-even-background-fill);

|

||||||

}

|

}

|

||||||

|

|

||||||

|

#chat-suggestion {

|

||||||

|

max-height: 350px;

|

||||||

|

}

|

||||||

|

|

||||||

|

#chat-suggestion table {

|

||||||

|

overflow: hidden;

|

||||||

|

}

|

||||||

|

|

||||||

|

#chat-suggestion table thead {

|

||||||

|

display: none;

|

||||||

|

}

|

||||||

|

|

||||||

|

#paper-suggestion table {

|

||||||

|

overflow: hidden;

|

||||||

|

}

|

||||||

|

|

||||||

|

svg.markmap {

|

||||||

|

width: 100%;

|

||||||

|

height: 100%;

|

||||||

|

font-family: Quicksand, sans-serif;

|

||||||

|

font-size: 15px;

|

||||||

|

}

|

||||||

|

|

||||||

|

div.markmap {

|

||||||

|

height: 400px;

|

||||||

|

}

|

||||||

|

|

||||||

|

#google-login {

|

||||||

|

max-width: 450px;

|

||||||

|

}

|

||||||

|

|

||||||

|

#user-api-key-wrapper {

|

||||||

|

max-width: 450px;

|

||||||

|

}

|

||||||

|

|

||||||

|

#login-row {

|

||||||

|

display: grid;

|

||||||

|

place-items: center;

|

||||||

|

}

|

||||||

|

|||||||

@@ -11,10 +11,25 @@ function run() {

|

|||||||

version_node.style = "position: fixed; top: 10px; right: 10px;";

|

version_node.style = "position: fixed; top: 10px; right: 10px;";

|

||||||

main_parent.appendChild(version_node);

|

main_parent.appendChild(version_node);

|

||||||

|

|

||||||

|

// add favicon

|

||||||

|

const favicon = document.createElement("link");

|

||||||

|

// set favicon attributes

|

||||||

|

favicon.rel = "icon";

|

||||||

|

favicon.type = "image/svg+xml";

|

||||||

|

favicon.href = "/favicon.ico";

|

||||||

|

document.head.appendChild(favicon);

|

||||||

|

|

||||||

|

// setup conversation dropdown placeholder

|

||||||

|

let conv_dropdown = document.querySelector("#conversation-dropdown input");

|

||||||

|

conv_dropdown.placeholder = "Browse conversation";

|

||||||

|

|

||||||

// move info-expand-button

|

// move info-expand-button

|

||||||

let info_expand_button = document.getElementById("info-expand-button");

|

let info_expand_button = document.getElementById("info-expand-button");

|

||||||

let chat_info_panel = document.getElementById("info-expand");

|

let chat_info_panel = document.getElementById("info-expand");

|

||||||

chat_info_panel.insertBefore(info_expand_button, chat_info_panel.childNodes[2]);

|

chat_info_panel.insertBefore(

|

||||||

|

info_expand_button,

|

||||||

|

chat_info_panel.childNodes[2]

|

||||||

|

);

|

||||||

|

|

||||||

// move toggle-side-bar button

|

// move toggle-side-bar button

|

||||||

let chat_expand_button = document.getElementById("chat-expand-button");

|

let chat_expand_button = document.getElementById("chat-expand-button");

|

||||||

@@ -24,22 +39,24 @@ function run() {

|

|||||||

// move setting close button

|

// move setting close button

|

||||||

let setting_tab_nav_bar = document.querySelector("#settings-tab .tab-nav");

|

let setting_tab_nav_bar = document.querySelector("#settings-tab .tab-nav");

|

||||||

let setting_close_button = document.getElementById("save-setting-btn");

|

let setting_close_button = document.getElementById("save-setting-btn");

|

||||||

|

if (setting_close_button) {

|

||||||

setting_tab_nav_bar.appendChild(setting_close_button);

|

setting_tab_nav_bar.appendChild(setting_close_button);

|

||||||

|

}

|

||||||

|

|

||||||

let default_conv_column_min_width = "min(300px, 100%)";

|

let default_conv_column_min_width = "min(300px, 100%)";

|

||||||

conv_column.style.minWidth = default_conv_column_min_width

|

conv_column.style.minWidth = default_conv_column_min_width;

|

||||||

|

|

||||||

globalThis.toggleChatColumn = (() => {

|

globalThis.toggleChatColumn = () => {

|

||||||

/* get flex-grow value of chat_column */

|

/* get flex-grow value of chat_column */

|

||||||

let flex_grow = conv_column.style.flexGrow;

|

let flex_grow = conv_column.style.flexGrow;

|

||||||

if (flex_grow == '0') {

|

if (flex_grow == "0") {

|

||||||

conv_column.style.flexGrow = '1';

|

conv_column.style.flexGrow = "1";

|

||||||

conv_column.style.minWidth = default_conv_column_min_width;

|

conv_column.style.minWidth = default_conv_column_min_width;

|

||||||

} else {

|

} else {

|

||||||

conv_column.style.flexGrow = '0';

|

conv_column.style.flexGrow = "0";

|

||||||

conv_column.style.minWidth = "0px";

|

conv_column.style.minWidth = "0px";

|

||||||

}

|

}

|

||||||

});

|

};

|

||||||

|

|

||||||

chat_column.insertBefore(chat_expand_button, chat_column.firstChild);

|

chat_column.insertBefore(chat_expand_button, chat_column.firstChild);

|

||||||

|

|

||||||

@@ -47,22 +64,34 @@ function run() {

|

|||||||

let mindmap_checkbox = document.getElementById("use-mindmap-checkbox");

|

let mindmap_checkbox = document.getElementById("use-mindmap-checkbox");

|

||||||

let citation_dropdown = document.getElementById("citation-dropdown");

|

let citation_dropdown = document.getElementById("citation-dropdown");

|

||||||

let chat_setting_panel = document.getElementById("chat-settings-expand");

|

let chat_setting_panel = document.getElementById("chat-settings-expand");

|

||||||

chat_setting_panel.insertBefore(mindmap_checkbox, chat_setting_panel.childNodes[2]);

|

chat_setting_panel.insertBefore(

|

||||||

|

mindmap_checkbox,

|

||||||

|

chat_setting_panel.childNodes[2]

|

||||||

|

);

|

||||||

chat_setting_panel.insertBefore(citation_dropdown, mindmap_checkbox);

|

chat_setting_panel.insertBefore(citation_dropdown, mindmap_checkbox);

|

||||||

|

|

||||||

|

// move share conv checkbox

|

||||||

|

let report_div = document.querySelector(

|

||||||

|

"#report-accordion > div:nth-child(3) > div:nth-child(1)"

|

||||||

|

);

|

||||||

|

let share_conv_checkbox = document.getElementById("is-public-checkbox");

|

||||||

|

if (share_conv_checkbox) {

|

||||||

|

report_div.insertBefore(share_conv_checkbox, report_div.querySelector("button"));

|

||||||

|

}

|

||||||

|

|

||||||

// create slider toggle

|

// create slider toggle

|

||||||

const is_public_checkbox = document.getElementById("is-public-checkbox");

|

const is_public_checkbox = document.getElementById("suggest-chat-checkbox");

|

||||||

const label_element = is_public_checkbox.getElementsByTagName("label")[0];

|

const label_element = is_public_checkbox.getElementsByTagName("label")[0];

|

||||||

const checkbox_span = is_public_checkbox.getElementsByTagName("span")[0];

|

const checkbox_span = is_public_checkbox.getElementsByTagName("span")[0];

|

||||||

new_div = document.createElement("div");

|

new_div = document.createElement("div");

|

||||||

|

|

||||||

label_element.classList.add("switch");

|

label_element.classList.add("switch");

|

||||||

is_public_checkbox.appendChild(checkbox_span);

|

is_public_checkbox.appendChild(checkbox_span);

|

||||||

label_element.appendChild(new_div)

|

label_element.appendChild(new_div);

|

||||||

|

|

||||||

// clpse

|

// clpse

|

||||||

globalThis.clpseFn = (id) => {

|

globalThis.clpseFn = (id) => {

|

||||||

var obj = document.getElementById('clpse-btn-' + id);

|

var obj = document.getElementById("clpse-btn-" + id);

|

||||||

obj.classList.toggle("clpse-active");

|

obj.classList.toggle("clpse-active");

|

||||||

var content = obj.nextElementSibling;

|

var content = obj.nextElementSibling;

|

||||||

if (content.style.display === "none") {

|

if (content.style.display === "none") {

|

||||||

@@ -70,29 +99,29 @@ function run() {

|

|||||||

} else {

|

} else {

|

||||||

content.style.display = "none";

|

content.style.display = "none";

|

||||||

}

|

}

|

||||||

}

|

};

|

||||||

|

|

||||||

// store info in local storage

|

// store info in local storage

|

||||||

globalThis.setStorage = (key, value) => {

|

globalThis.setStorage = (key, value) => {

|

||||||

localStorage.setItem(key, value)

|

localStorage.setItem(key, value);

|

||||||

}

|

};

|

||||||

globalThis.getStorage = (key, value) => {

|

globalThis.getStorage = (key, value) => {

|

||||||

item = localStorage.getItem(key);

|

item = localStorage.getItem(key);

|

||||||

return item ? item : value;

|

return item ? item : value;

|

||||||

}

|

};

|

||||||

globalThis.removeFromStorage = (key) => {

|

globalThis.removeFromStorage = (key) => {

|

||||||

localStorage.removeItem(key)

|

localStorage.removeItem(key);

|

||||||

}

|

};

|

||||||

|

|

||||||

// Function to scroll to given citation with ID

|

// Function to scroll to given citation with ID

|

||||||

// Sleep function using Promise and setTimeout

|

// Sleep function using Promise and setTimeout

|

||||||

function sleep(ms) {

|

function sleep(ms) {

|

||||||

return new Promise(resolve => setTimeout(resolve, ms));

|

return new Promise((resolve) => setTimeout(resolve, ms));

|

||||||

}

|

}

|

||||||

|

|

||||||

globalThis.scrollToCitation = async (event) => {

|

globalThis.scrollToCitation = async (event) => {

|

||||||

event.preventDefault(); // Prevent the default link behavior

|

event.preventDefault(); // Prevent the default link behavior

|

||||||

var citationId = event.target.getAttribute('id');

|

var citationId = event.target.getAttribute("id");

|

||||||

|

|

||||||

await sleep(100); // Sleep for 100 milliseconds

|

await sleep(100); // Sleep for 100 milliseconds

|

||||||

|

|

||||||

@@ -110,8 +139,148 @@ function run() {

|

|||||||

detail_elem.getElementsByClassName("pdf-link").item(0).click();

|

detail_elem.getElementsByClassName("pdf-link").item(0).click();

|

||||||

} else {

|

} else {

|

||||||

if (citation) {

|

if (citation) {

|

||||||

citation.scrollIntoView({ behavior: 'smooth' });

|

citation.scrollIntoView({ behavior: "smooth" });

|

||||||

|

}

|

||||||

|

}

|

||||||

|

};

|

||||||

|

|

||||||

|

globalThis.fullTextSearch = () => {

|

||||||

|

// Assign text selection event to last bot message

|

||||||

|

var bot_messages = document.querySelectorAll(

|

||||||

|

"div#main-chat-bot div.message-row.bot-row"

|

||||||

|

);

|

||||||

|

var last_bot_message = bot_messages[bot_messages.length - 1];

|

||||||

|

|

||||||

|

// check if the last bot message has class "text_selection"

|

||||||

|

if (last_bot_message.classList.contains("text_selection")) {

|

||||||

|

return;

|

||||||

|

}

|

||||||

|

|

||||||

|

// assign new class to last message

|

||||||

|

last_bot_message.classList.add("text_selection");

|

||||||

|

|

||||||

|

// Get sentences from evidence div

|

||||||

|

var evidences = document.querySelectorAll(

|

||||||

|

"#html-info-panel > div:last-child > div > details.evidence div.evidence-content"

|

||||||

|

);

|

||||||

|

console.log("Indexing evidences", evidences);

|

||||||

|

|

||||||

|

const segmenterEn = new Intl.Segmenter("en", { granularity: "sentence" });

|

||||||

|

// Split sentences and save to all_segments list

|

||||||

|

var all_segments = [];

|

||||||

|

for (var evidence of evidences) {

|

||||||

|

// check if <details> tag is open

|

||||||

|

if (!evidence.parentElement.open) {

|

||||||

|

continue;

|

||||||

|

}

|

||||||

|

var markmap_div = evidence.querySelector("div.markmap");

|

||||||

|

if (markmap_div) {

|

||||||

|

continue;

|

||||||

|

}

|

||||||

|

|

||||||

|

var evidence_content = evidence.textContent.replace(/[\r\n]+/g, " ");

|

||||||

|

sentence_it = segmenterEn.segment(evidence_content)[Symbol.iterator]();

|

||||||

|

while ((sentence = sentence_it.next().value)) {

|

||||||

|

segment = sentence.segment.trim();

|

||||||

|

if (segment) {

|

||||||

|

all_segments.push({

|

||||||

|

id: all_segments.length,

|

||||||

|

text: segment,

|

||||||

|

});

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

let miniSearch = new MiniSearch({

|

||||||

|

fields: ["text"], // fields to index for full-text search

|

||||||

|

storeFields: ["text"],

|

||||||

|

});

|

||||||

|

|

||||||

|

// Index all documents

|

||||||

|

miniSearch.addAll(all_segments);

|

||||||

|

|

||||||

|

last_bot_message.addEventListener("mouseup", () => {

|

||||||

|

let selection = window.getSelection().toString();

|

||||||

|

let results = miniSearch.search(selection);

|

||||||

|

|

||||||

|

if (results.length == 0) {

|

||||||

|

return;

|

||||||

|

}

|

||||||

|

let matched_text = results[0].text;

|

||||||

|

console.log("query\n", selection, "\nmatched text\n", matched_text);

|

||||||

|

|

||||||

|

var evidences = document.querySelectorAll(

|

||||||

|

"#html-info-panel > div:last-child > div > details.evidence div.evidence-content"

|

||||||

|

);

|

||||||

|

// check if modal is open

|

||||||

|

var modal = document.getElementById("pdf-modal");

|

||||||

|

|

||||||

|

// convert all <mark> in evidences to normal text

|

||||||

|

evidences.forEach((evidence) => {

|

||||||

|

evidence.querySelectorAll("mark").forEach((mark) => {

|

||||||

|

mark.outerHTML = mark.innerText;

|

||||||

|

});

|

||||||

|

});

|

||||||

|

|

||||||

|

// highlight matched_text in evidences

|

||||||

|

for (var evidence of evidences) {

|

||||||

|

var evidence_content = evidence.textContent.replace(/[\r\n]+/g, " ");

|

||||||

|

if (evidence_content.includes(matched_text)) {

|

||||||

|

// select all p and li elements

|

||||||

|

paragraphs = evidence.querySelectorAll("p, li");

|

||||||

|

for (var p of paragraphs) {

|

||||||

|

var p_content = p.textContent.replace(/[\r\n]+/g, " ");

|

||||||

|

if (p_content.includes(matched_text)) {

|

||||||

|

p.innerHTML = p_content.replace(

|

||||||

|

matched_text,

|

||||||

|

"<mark>" + matched_text + "</mark>"

|

||||||

|

);

|

||||||

|

console.log("highlighted", matched_text, "in", p);

|

||||||

|

if (modal.style.display == "block") {

|

||||||

|

// trigger on click event of PDF Preview link

|

||||||

|

var detail_elem = p;

|

||||||

|

// traverse up the DOM tree to find the parent element with tag detail

|

||||||

|

while (detail_elem.tagName.toLowerCase() != "details") {

|

||||||

|

detail_elem = detail_elem.parentElement;

|

||||||

|

}

|

||||||

|

detail_elem.getElementsByClassName("pdf-link").item(0).click();

|

||||||

|

} else {

|

||||||

|

p.scrollIntoView({ behavior: "smooth", block: "center" });

|

||||||

|

}

|

||||||

|

break;

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

});

|

||||||

|

};

|

||||||

|

|

||||||

|

globalThis.spawnDocument = (content, options) => {

|

||||||

|

let opt = {

|

||||||

|

window: "",

|

||||||

|

closeChild: true,

|

||||||

|

childId: "_blank",

|

||||||

|

};

|

||||||

|

Object.assign(opt, options);

|

||||||

|

// minimal error checking

|

||||||

|

if (

|

||||||

|

content &&

|

||||||

|

typeof content.toString == "function" &&

|

||||||

|

content.toString().length

|

||||||

|

) {

|

||||||

|

let child = window.open("", opt.childId, opt.window);

|

||||||

|

child.document.write(content.toString());

|

||||||

|

if (opt.closeChild) child.document.close();

|

||||||

|

return child;

|

||||||

|

}

|

||||||

|

};

|

||||||

|

|

||||||

|

globalThis.fillChatInput = (event) => {

|

||||||

|

let chatInput = document.querySelector("#chat-input textarea");

|

||||||

|

// fill the chat input with the clicked div text

|

||||||

|

chatInput.value = "Explain " + event.target.textContent;

|

||||||

|

var evt = new Event("change");

|

||||||

|

chatInput.dispatchEvent(new Event("input", { bubbles: true }));

|

||||||

|

chatInput.focus();

|

||||||

|

};

|

||||||

|

}

|

||||||
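`fullTextSearch` above splits the open evidence blocks into sentences, indexes them with MiniSearch, and on `mouseup` wraps the best-matching sentence in `<mark>`. The Python sketch below mirrors that flow under loud assumptions: a regex split stands in for `Intl.Segmenter`, and a naive shared-token count stands in for MiniSearch's ranking, so it shows the shape of the logic rather than the library's scoring. All function names are hypothetical.

```python
import re
from typing import List, Optional, Set


def split_sentences(text: str) -> List[str]:
    """Rough sentence split; a stand-in for Intl.Segmenter, not equivalent."""
    flat = re.sub(r"[\r\n]+", " ", text)
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", flat) if s.strip()]


def tokens(text: str) -> Set[str]:
    """Lower-cased word tokens of the text."""
    return set(re.findall(r"\w+", text.lower()))


def best_match(selection: str, sentences: List[str]) -> Optional[str]:
    """Return the sentence sharing the most tokens with the selection.

    Naive overlap count: an assumption standing in for MiniSearch ranking.
    """
    query = tokens(selection)
    scored = [(len(query & tokens(s)), s) for s in sentences]
    score, sentence = max(scored, key=lambda pair: pair[0], default=(0, None))
    return sentence if score > 0 else None


def highlight(paragraph: str, matched: str) -> str:
    """Wrap the first occurrence of the matched sentence in <mark>."""
    return paragraph.replace(matched, "<mark>" + matched + "</mark>", 1)


evidence = "Ollama runs models locally.\nThe context window defaults to 8192. Done."
sentences = split_sentences(evidence)
match = best_match("context window size", sentences)
if match is not None:
    print(highlight(evidence.replace("\n", " "), match))
```

As in the JavaScript, only the first occurrence of the matched sentence is wrapped, and a selection with no match leaves the evidence untouched.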

|

|||||||

@@ -17,7 +17,7 @@ function onBlockLoad () {

|

|||||||

<span class="close" id="modal-expand">⛶</span>

|

<span class="close" id="modal-expand">⛶</span>

|

||||||

</div>

|

</div>

|

||||||

<div class="modal-body">

|

<div class="modal-body">

|

||||||

<pdfjs-viewer-element id="pdf-viewer" viewer-path="GRADIO_ROOT_PATH/file=PDFJS_PREBUILT_DIR" locale="en" phrase="true">

|

<pdfjs-viewer-element id="pdf-viewer" viewer-path="GR_FILE_ROOT_PATH/file=PDFJS_PREBUILT_DIR" locale="en" phrase="true">

|

||||||

</pdfjs-viewer-element>

|

</pdfjs-viewer-element>

|

||||||

</div>

|

</div>

|

||||||

</div>

|

</div>

|

||||||

@@ -51,28 +51,65 @@ function onBlockLoad () {

|

|||||||

modal.style.height = "85dvh";

|

modal.style.height = "85dvh";

|

||||||

}

|

}

|

||||||

};

|

};

|

||||||

|

};

|

||||||

|

|

||||||

|

function matchRatio(str1, str2) {

|

||||||

|

let n = str1.length;

|

||||||

|

let m = str2.length;

|

||||||

|

|

||||||

|

let lcs = [];

|

||||||

|

for (let i = 0; i <= n; i++) {

|

||||||

|

lcs[i] = [];

|

||||||

|

for (let j = 0; j <= m; j++) {

|

||||||

|

lcs[i][j] = 0;

|

||||||

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

globalThis.compareText = (search_phrase, page_label) => {

|

let result = "";

|

||||||

var iframe = document.querySelector("#pdf-viewer").iframe;

|

let max = 0;

|

||||||

var innerDoc = (iframe.contentDocument) ? iframe.contentDocument : iframe.contentWindow.document;

|

for (let i = 0; i < n; i++) {

|

||||||

|

for (let j = 0; j < m; j++) {

|

||||||

|

if (str1[i] === str2[j]) {

|

||||||

|

lcs[i + 1][j + 1] = lcs[i][j] + 1;

|

||||||

|

if (lcs[i + 1][j + 1] > max) {

|

||||||

|

max = lcs[i + 1][j + 1];

|

||||||

|

result = str1.substring(i - max + 1, i + 1);

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

var query_selector = (

|

return result.length / Math.min(n, m);

|

||||||

|

}

|

||||||

|

|

||||||
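`matchRatio` above finds the length of the longest common substring of two strings with a dynamic-programming table and divides it by the length of the shorter string. A direct Python port as a sketch; the name `match_ratio` is hypothetical, and the guard for empty input is an addition (the JavaScript version would evaluate `0 / 0` and return `NaN` there):

```python
def match_ratio(str1: str, str2: str) -> float:
    """Longest-common-substring length divided by the shorter input's length."""
    n, m = len(str1), len(str2)
    if min(n, m) == 0:
        # Added guard, not present in the JavaScript original.
        return 0.0

    # lcs[i + 1][j + 1] is the length of the common substring that ends at
    # str1[i] and str2[j]; row 0 and column 0 stay zero.
    lcs = [[0] * (m + 1) for _ in range(n + 1)]
    longest = 0
    for i in range(n):
        for j in range(m):
            if str1[i] == str2[j]:
                lcs[i + 1][j + 1] = lcs[i][j] + 1
                longest = max(longest, lcs[i + 1][j + 1])
    return longest / min(n, m)


print(match_ratio("context window", "the context"))
```

The table costs O(n·m) time and memory, which is fine for comparing a search phrase against a single PDF text span but would be worth revisiting for long inputs.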

|

globalThis.compareText = (search_phrases, page_label) => {

|

||||||

|

var iframe = document.querySelector("#pdf-viewer").iframe;

|

||||||

|

var innerDoc = iframe.contentDocument

|

||||||

|

? iframe.contentDocument

|

||||||

|

: iframe.contentWindow.document;

|

||||||

|

|

||||||

|

var renderedPages = innerDoc.querySelectorAll("div#viewer div.page");

|

||||||

|

if (renderedPages.length == 0) {

|

||||||

|

// if pages are not rendered yet, wait and try again

|

||||||

|

setTimeout(() => compareText(search_phrases, page_label), 2000);

|

||||||

|

return;

|

||||||

|

}

|

||||||

|

|

||||||

|

var query_selector =

|

||||||

"#viewer > div[data-page-number='" +

|

"#viewer > div[data-page-number='" +

|

||||||

page_label +

|

page_label +

|

||||||

"'] > div.textLayer > span"

|

"'] > div.textLayer > span";

|

||||||

);

|

|

||||||

var page_spans = innerDoc.querySelectorAll(query_selector);

|

var page_spans = innerDoc.querySelectorAll(query_selector);

|

||||||

for (var i = 0; i < page_spans.length; i++) {

|

for (var i = 0; i < page_spans.length; i++) {

|

||||||

var span = page_spans[i];

|

var span = page_spans[i];

|

||||||

if (

|

if (

|

||||||

span.textContent.length > 4 &&

|

span.textContent.length > 4 &&

|

||||||

(

|

search_phrases.some(

|

||||||